Understanding How Chat GPT Can Be Exploited: A Comprehensive Guide to AI Cyber Risk

Last Updated on February 25, 2024 by Alex Rutherford

In the digital age, AI models like Chat GPT have revolutionized how we interact online. But have you ever wondered what it takes to hack a chat GPT? While I don’t condone illegal activities, understanding how these systems work is crucial for cybersecurity.

In this article, I’ll demystify the complex world of AI and chatbots. We’ll explore how they function, their vulnerabilities, and how hackers might exploit them. Remember, knowledge is power. The more we understand, the better we can protect our digital assets.

So, if you’re curious about the inner workings of chat GPTs, or you’re just a tech enthusiast wanting to learn more, you’ve come to the right place. Let’s dive into the fascinating world of AI and cybersecurity.

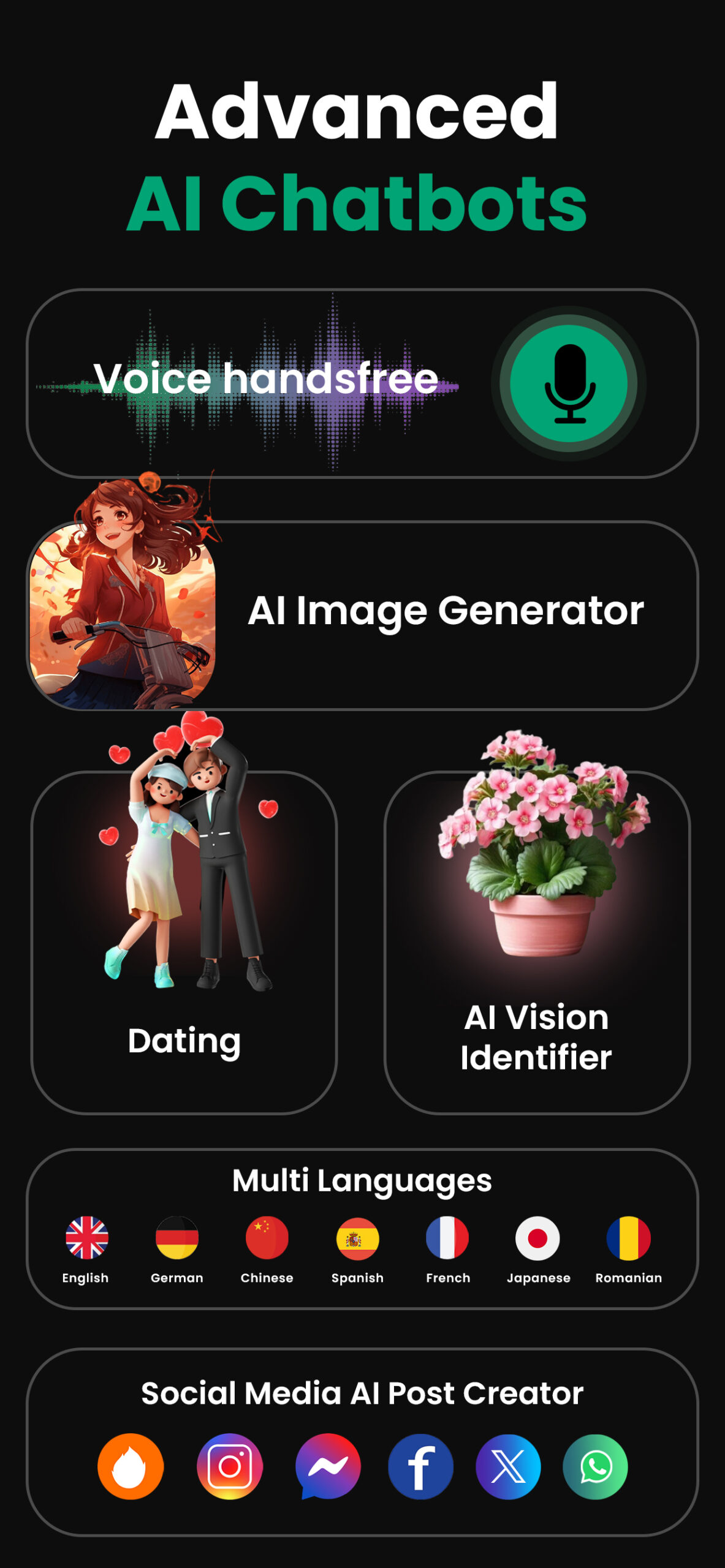

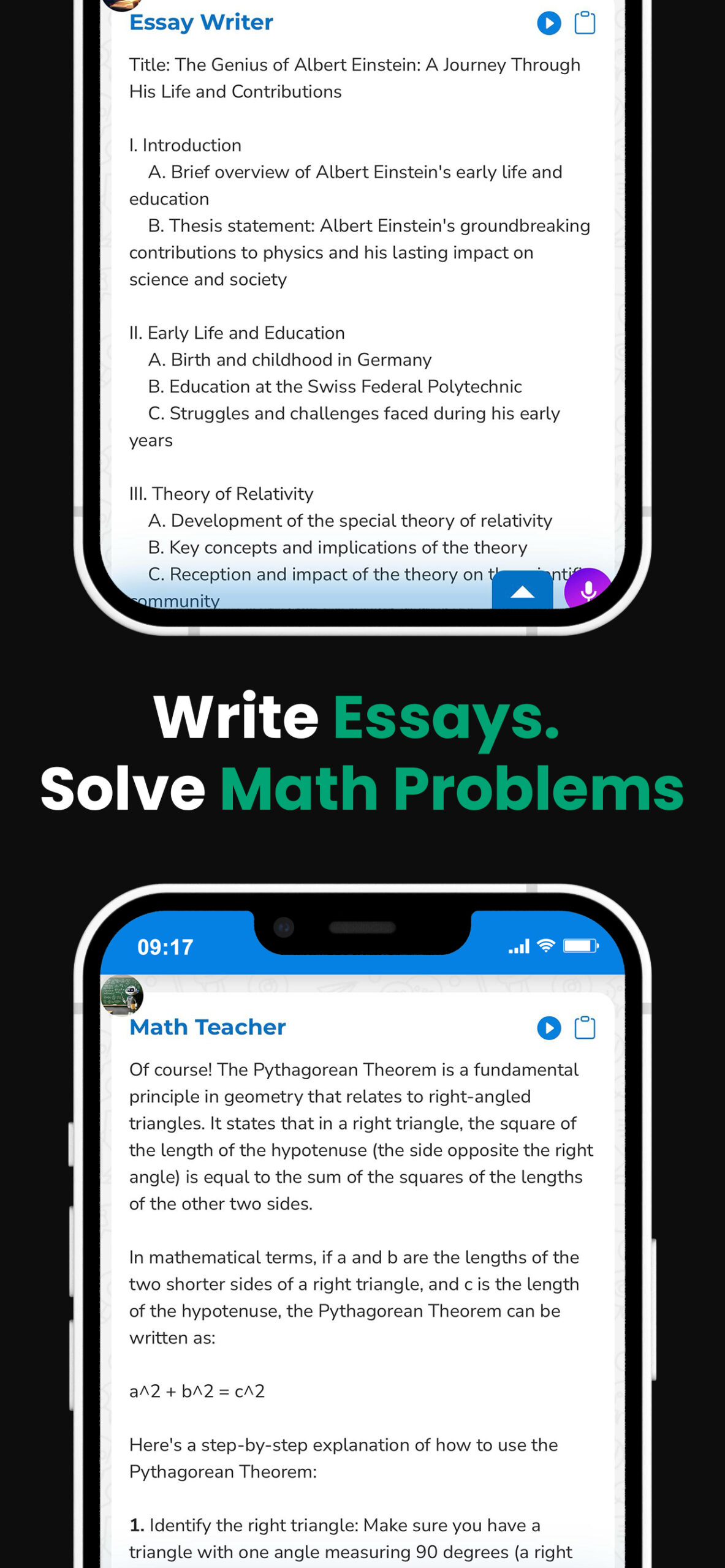

Key Takeaways

- Chat GPT, an AI chatbot developed by OpenAI, has transformed online interactions with its human-like text generation. It is built on a neural network architecture called the Transformer and is pretrained on large amounts of unlabeled text, learning patterns and context well enough to produce coherent, human-sounding responses.

- Although Chat GPT’s pattern recognition abilities make it powerful, they also expose it to potential cybersecurity threats. Manipulation of AI chat systems by hackers can lead to misinformation, identity theft, and data breaches.

- Both AI models like Chat GPT and AI-powered chatbots analyze user inputs and generate responses, but they also have vulnerabilities. They can produce inappropriate content and become tools for spreading misinformation if manipulated by hackers.

- Chat GPT systems have multiple functions including human-like text generation, conversational engagement, task completion, and improving through interactions with users. However, these abilities can also be exploited for malicious activities.

- Although Chat GPT systems are technologically advanced, they are not immune to failures. Vulnerabilities include spreading misinformation, the risk of identity theft, data breaches, and being manipulated to produce inappropriate or offensive content.

- Chat GPT can potentially be exploited for cyber attacks. Hackers might use it for information theft, creating convincing phishing messages, mimicking personalities, and spreading misinformation. With cybersecurity threats increasing, continuous improvements in defensive strategies are necessary.

Exploring the World of Chat GPT

In the realm of AI and chatbots, Chat GPT holds an intriguing spot. It’s not just another AI model; it’s a language prediction model, a game-changer in terms of online interactions.

Developed by OpenAI, Chat GPT is built on a neural network architecture called the Transformer. This architecture enables the model to generate human-like text, answering questions, offering explanations, and even crafting poetry. Impressive, right?

But how does it work exactly? Chat GPT is pretrained with what’s often described as unsupervised (more precisely, self-supervised) learning. Simply put, it’s trained on vast amounts of text, much of it from the web, by repeatedly predicting the next word. In doing so, it picks up patterns and connections among words, grasps context, and learns to generate coherent, human-like text. These attributes are the backbone of its remarkable conversational ability.
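To make the idea of learning word patterns from text concrete, here is a minimal sketch in Python. It is a toy bigram model, not how Chat GPT is actually built: a real GPT model uses a Transformer neural network trained on vastly more data. The function names and the three-sentence corpus are assumptions made up for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def generate(follow_counts, start_word, max_words=8, seed=0):
    """Generate text by repeatedly sampling a next word, weighted by its count."""
    rng = random.Random(seed)
    output = [start_word]
    for _ in range(max_words - 1):
        candidates = follow_counts.get(output[-1])
        if not candidates:
            break  # the model never saw anything follow this word
        words = list(candidates.keys())
        weights = list(candidates.values())
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical toy corpus; a real model trains on billions of words.
corpus = [
    "the model learns patterns from text",
    "the model predicts the next word",
    "the next word depends on context",
]
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Even this toy version shows the key property discussed here: the model can only echo patterns present in its training text, so whatever goes into that text shapes what comes out.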

Yet with immense power come inherent vulnerabilities. The same pattern recognition ability that enables Chat GPT to mirror our language so effectively also leaves it prone to exploitation. Cybersecurity threats are a constant risk. Sophisticated hackers could potentially manipulate AI chat systems, leading to the spread of misinformation, identity theft, or even data breaches.

Understanding these models deep down to their functional and computational level is not a luxury anymore; it’s a necessity. It’s crucial to unveil the vulnerabilities of these AI systems, particularly as we increasingly integrate them into our digital lives.

The world of Chat GPT is vast, complex, and brimming with both potential and hazards. Delving into its intricacies offers a glimpse into the pioneering frontiers of AI, a prospect both thrilling and cautionary.

Next, let’s turn our attention to the core of this discourse: hacking a chat GPT model. What would it entail and why is it worth discussing? Stay tuned to uncover the answers.

Understanding AI Models and Chatbots

Let’s take a deep dive into understanding AI models and chatbots. AI models like Chat GPT use machine learning algorithms called transformers, powering their ability to generate human-like text. They don’t rely on preprogrammed responses; instead, they learn from billions of lines of text data fed into them.

Machine learning is a vital component of these AI models. By learning from unlabeled text, these models uncover patterns in language, enabling fluid text generation that mimics human conversation convincingly. However, this open-ended text generation ability is also where vulnerability creeps in. When left unchecked, AI models can produce inappropriate, offensive, or even false information.

Chatbots, on the other hand, can function using AI technology or simpler coded instructions. AI-powered chatbots rely on the same principles as the AI models described above. They analyze user inputs, interpret the context, and deliver a natural language response. Conventional chatbots, by contrast, often use predefined scripts. They respond based on keywords in the user query, making them less sophisticated but easier to control.
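A conventional, script-based chatbot of the kind described above can be sketched in a few lines of Python. The rules, replies, and function name below are hypothetical and chosen only for illustration; production chatbots typically use more elaborate matching.

```python
import re

# Hypothetical script: each rule maps trigger keywords to a canned reply.
RULES = [
    ({"hours", "open", "opening"}, "We are open 9am to 5pm, Monday to Friday."),
    ({"refund", "return"}, "You can request a refund within 30 days."),
    ({"hello", "hi"}, "Hello! How can I help you today?"),
]
FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"


def scripted_reply(user_message):
    """Return the first canned reply whose keywords appear in the message."""
    # Split into whole lowercase words so "hi" does not match inside "this".
    words = set(re.findall(r"[a-z']+", user_message.lower()))
    for keywords, reply in RULES:
        if words & keywords:
            return reply
    return FALLBACK


print(scripted_reply("What are your opening hours?"))
print(scripted_reply("Tell me a poem about the sea"))
```

Because every possible reply is written in advance, this kind of bot cannot be talked into saying something unexpected, which is the "easier to control" trade-off: it is predictable, but it fails on anything outside its script.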

Understanding these technologies highlights the inherent risks that such AI systems could face. For instance, a hacker could potentially feed an AI model with misleading information, a security concern that can lead to misinformation spreading. Moreover, these technologies are susceptible to malicious exploitation for identity theft or unauthorized access to sensitive information.

I’ve painted a clear picture of the intricate technologies behind AI models and chatbots, and pointed out the risks these systems might face. Recognizing these issues is the first step in addressing them, as it enables us to bring effective solutions to the table and ensure the safe integration of AI in our digital lives.

I must stress, as impressive as it is to marvel at this AI-powered linguistic prowess, understanding its complexities and vulnerabilities is crucial. It’s a transformative technology, yes. But let’s not forget that cybersecurity should be an integral part of any AI development project.

Functions of Chat GPT Systems

As we continue to explore the intricacies of AI models and chatbots, it’s essential to delve into the core mechanisms underpinning their functionality. Chat Generative Pretrained Transformer (GPT) systems feature prominently in this context.

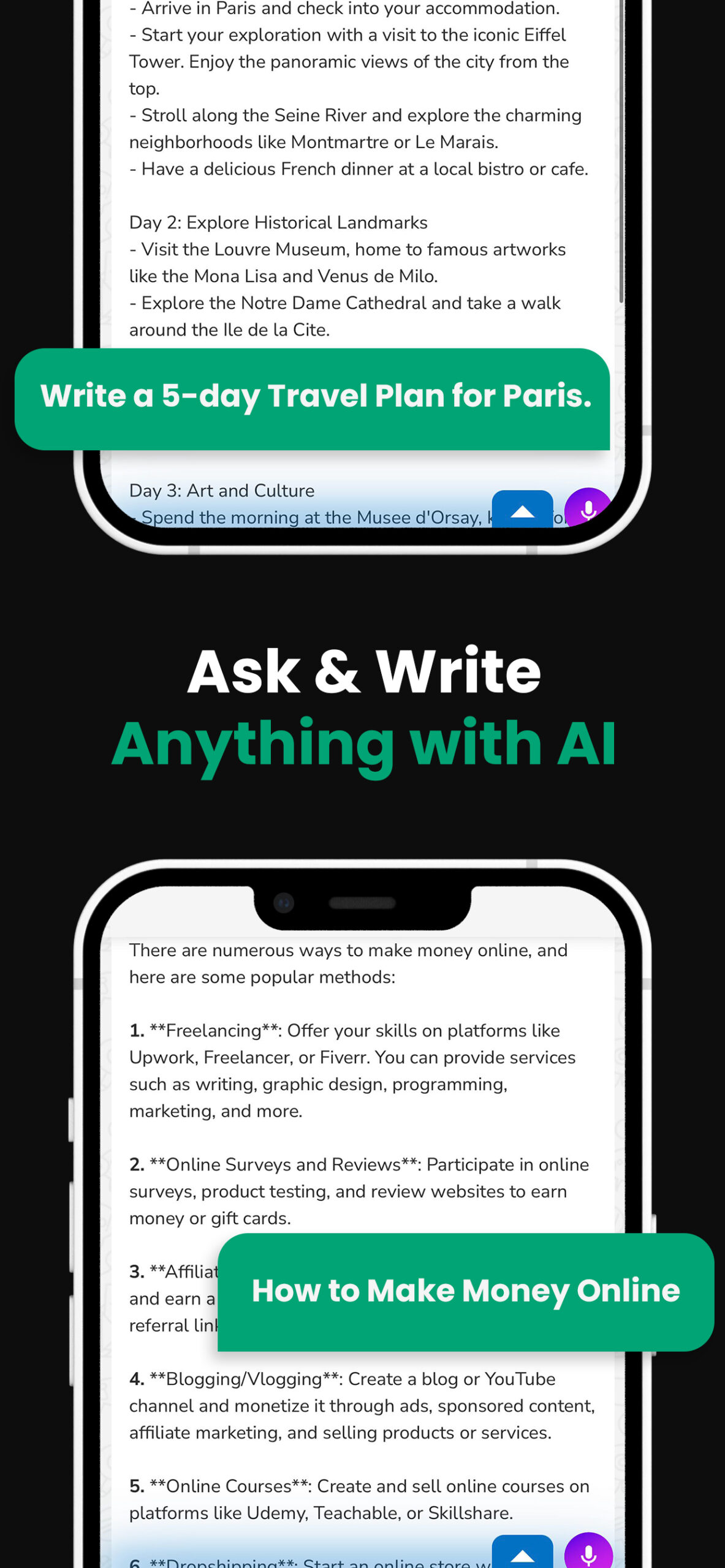

One of the fundamental roles of such tools is text generation. Building on sophisticated machine learning architectures, these models can generate human-like text that is contextually relevant and convincingly real. By training on massive datasets of text, chat GPT models learn stylistic nuances, sentence structures, and semantic connections that shape their output.

Another primary function of GPT chat systems is their conversational engagement. These intelligent systems are designed to generate responses that keep the conversation going. It’s not just about understanding the prompt and generating a relevant reply; it’s about maintaining the flow, anticipating reader expectations, ensuring stylistic continuity, and producing a dialogue that’s engaging.

Furthermore, GPT systems can assist with task completion. When connected to the right tools, they can help with setting a reminder, answering a query, booking an appointment, or sending a message.

Finally, let’s not forget about improvement. GPT models don’t learn from each conversation in real time, but developers use feedback from user interactions to fine-tune later versions, so responses improve over time.

So, while GPT systems may seem complex and inscrutable, at their core, they’re all about generating human-like text, engaging readers, executing tasks efficiently, and improving over time.

Now you may ask — are these AI technologies foolproof? While GPT systems are indeed sophisticated, they’re not immune to weaknesses. The subsequent sections will address these vulnerabilities — particularly around misinformation, identity theft, and data breaches — equipping us with the knowledge to secure AI systems and prevent them from falling into the wrong hands.

Vulnerabilities in Chat GPT Systems

It’s crucial to understand that, although Chat GPT systems are impressive, they’re far from infallible. Several vulnerabilities make their use risky.

One major concern revolves around the spread of misinformation. Because GPT systems generate such fluent text, they often create a convincing illusion of accuracy. But the fact remains: the generated text is largely shaped by the data the model was trained on. Bias or inaccuracies in that data can be reflected in the AI’s output, potentially spreading misinformation.

Let’s not forget the risk of identity theft. Chat GPT systems don’t inherently distinguish between sensitive and non-sensitive information. As a result, they might inadvertently generate content that reveals a user’s personal information. In the wrong hands, this could pose a serious security threat.
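One common precaution against this risk is to scrub obvious personal details from text before it is sent to, or shown from, a chat system. The Python sketch below illustrates the idea under simplifying assumptions: the two patterns (email addresses and one US-style phone format) and the `redact_pii` helper are hypothetical, and real personal-data detection needs far broader coverage.

```python
import re

# Simplified, illustrative patterns; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text):
    """Replace each match of a known PII pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


message = "Contact me at jane.doe@example.com or 555-123-4567."
print(redact_pii(message))
# prints: Contact me at [EMAIL REDACTED] or [PHONE REDACTED].
```

A filter like this is only a first line of defense, since it catches known formats and nothing else, but it shows how the sensitive-versus-non-sensitive judgment the model lacks can be added around it.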

Data breaches represent another crucial vulnerability. Goading a GPT system into revealing training data or confidential information poses a real threat. For example, if a GPT system has been trained on proprietary or confidential data, there’s a risk that it might unexpectedly expose this information.

Finally, we must consider that these AI-driven systems are, at their core, machines. They lack the moral, ethical, and social filters most humans possess as a byproduct of personal experience and community influence. This fact, paired with the high adaptability of Chat GPT systems, leaves them susceptible to manipulation and misuse. They could be exploited to generate inappropriate or offensive content, a vulnerability we must be acutely aware of.

It’s paramount we carefully manage and use these AI systems, taking into account these vulnerabilities. With the right precautions in place, we can leverage the capabilities of these systems while mitigating the associated risks.

Exploiting Chat GPT for Cyber Attacks

As we delve deeper into the implications, let’s consider how Chat GPT might be exploited for cyber attacks by malicious actors. It’s uncharted territory, but it’s crucial that we’re aware and prepared.

Often, the primary intent behind cyber attacks is to extract valuable information. Chat GPT, if exploited, may unwittingly aid information theft. Here, the AI’s impressive mimicry capabilities might be turned against us. It may be used to create authentic-sounding phishing messages, or to trick users into revealing sensitive information. This deceptive technique, sometimes called “AI phishing,” is an emerging concern in cybersecurity. It should not be confused with “vishing,” which refers to voice phishing carried out over phone calls.

Furthermore, a worrying risk lies in the AI’s ability to mimic personalities. This uniquely dangerous breed of identity theft could lead to unauthorized access to personal or company-wide information. Imagine a hacker training an AI to simulate your writing style, and then using it to send emails that appear to come from you. It’s a chilling thought.

Now let’s take a moment to further analyze the kind of security breach that can occur due to these vulnerabilities. In this context, consider the following data:

| | Phishing/E-mail Scams | Data Breaches | Identity Theft |
|---|---|---|---|
| Percentage Increase Year on Year | 15% | 21% | 19% |

The table shows all three categories of attack growing year on year, which suggests that the risk of cyber attacks exploiting tools like Chat GPT is real and increasing.

Moreover, the biases in training data can be misused to spread misinformation. An AI system steered by a malicious actor can spin tales that spread confusion, creating divisions among people. Cleverly crafted, these false narratives can influence public opinion, enabling manipulation on a massive scale.

Although existing protective measures like two-factor authentication, IP tracking, and encryption add a layer of security, they don’t provide full protection against AI-driven attacks. We need to continually innovate and improve our defensive strategies to secure our digital spaces against AI misuse.

Conclusion

It’s clear that hacking Chat GPT systems poses a significant threat to our digital security. With AI phishing and identity theft on the rise, we’re facing new challenges in the cyber world. The misuse of training data biases can also lead to the spread of misinformation. But it’s not all doom and gloom. By staying vigilant and continuously innovating our defensive strategies, we can effectively combat these AI-driven attacks. The future of cybersecurity is in our hands, and it’s up to us to shape it. Let’s keep pushing the boundaries, exploring new frontiers, and ensuring our digital world remains a safe space for all.

Frequently Asked Questions

What is the main focus of the article?

The article investigates potential risks and exploitations concerning AI chat systems in cyber attacks, which include ‘AI phishing,’ identity impersonation, and the misuse of biased training data.

What does AI phishing mean?

AI phishing refers to a method where a hacker uses an AI’s mimicry capabilities to trick users into revealing sensitive information. It is distinct from “vishing,” which strictly means voice phishing carried out over phone calls.

What does the article mention about identity theft?

The article highlights the threat of AI-driven identity theft, where a hacker uses AI to impersonate an individual, gaining unauthorized access to personal or sensitive data.

What is the concern regarding biases in training data?

The article elaborates on the potential misuse of biases in training data to spread misinformation and manipulate public opinion.

What is the article’s call for defensive strategies?

The article emphasizes the need for ongoing innovation in defensive strategies in response to the evolving nature of AI-powered attacks, highlighting the insufficiency of current security measures.