Unmasking AI: Does ChatGPT Plagiarize, and Is It Ethical?

In the AI world, there’s a hot question buzzing around – does ChatGPT plagiarize? Now, I’ve been around the block a few times, and I’ve seen AI technologies evolve. But this question is not as simple as it seems.

ChatGPT, developed by OpenAI, is an AI language model that’s been making waves for its ability to generate human-like text. It’s impressive, sure. But does it cross the line into plagiarism? That’s what we’re here to find out.

This question isn’t just about ChatGPT. It’s about how we define plagiarism in the age of AI. It’s about understanding the nuances of AI language generation. So, let’s dive in, shall we?

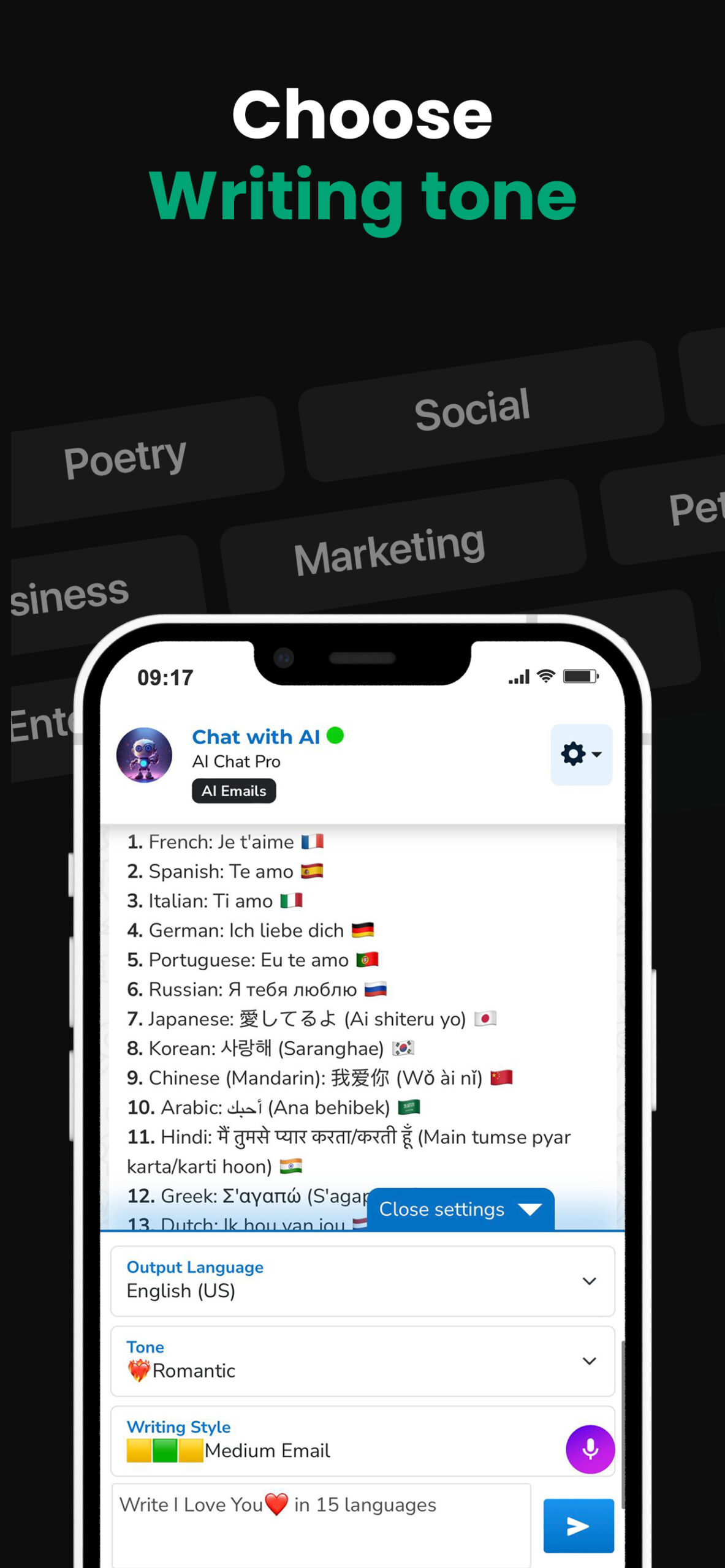

PowerBrain AI Chat App powered by ChatGPT & GPT-4

Download iOS: AI Chat

Download Android: AI Chat

Read more on our post about ChatGPT Apps & AI Chat App

Key Takeaways

- AI language models like ChatGPT, developed by OpenAI, do not plagiarize in the conventional sense. They generate text from patterns learned during training rather than retrieving and copying passages from their training data.

- The models create compositions mirroring human-like text. Instances may arise where generated text perfectly matches with the source data, creating an illusion of plagiarism.

- ChatGPT does not invent from nothing; it discovers patterns in extensive internet datasets. It uses a transformer neural network to produce human-like text, and its responses are based on learned text structures, vocabulary, and syntax.

- In AI language generation, any close resemblance to the source material is an inadvertent outcome, not deliberate copying. The model is not designed to store or look up specific documents, books, or sources.

- While ChatGPT doesn’t plagiarize in a traditional context, ethical implications in AI language generation remain crucial. AI systems should respect ethical principles and values, including privacy rights and transparency about data usage.

- Detecting plagiarism in AI-generated content like ChatGPT is an intricate task. It’s critical to discern if the AI is simply regurgitating words from its training dataset or creating original content based on the learned material.

- The issue of plagiarism detection in ChatGPT merges into a larger debate about ethics, usage rights, and transparency in AI operations. As we continue to explore this field, it’s essential to bridge the gap between technical innovation and ethical practices.

Understanding Plagiarism in AI

To get to the heart of this debate, it’s vital to grasp what we mean by plagiarism in the realm of artificial intelligence. The traditional understanding of plagiarism is rooted in a human context, where an individual presents someone else’s work as their own. It’s a straightforward concept when applied to human-authored content but becomes increasingly complex when dealing with AI like ChatGPT.

AI language models like ChatGPT are trained on vast datasets, largely made up of publicly available online text. They don’t have access to specific documents or proprietary databases. These models generate text based on patterns they’ve learned during their training phase rather than retrieving information from a particular source. They don’t create content by copying text verbatim from their training sets. Instead, they generate new compositions designed to mirror human-like text.

That being said, instances may arise where the generated text matches the source data exactly, creating an illusion of plagiarism. This shows how difficult it is to pin down ‘plagiarism’ within the context of AI. Is it really plagiarism, or is it just the AI model successfully doing what it was trained to do?

To tackle this loaded question, we need to step back and reconsider our framework for understanding plagiarism in the AI context. It demands a shift from a black-and-white view of ‘copying’ to appreciating the nuances of AI language generation.

Let’s delve deeper into this fascinating debate, unpacking the complexities that make it more than just a binary issue. As we do this, we’ll begin to realize the need to reinvent our understanding of both artificial intelligence and plagiarism.

How ChatGPT Works

Let’s look at the inner workings of ChatGPT and break down how OpenAI’s language model generates its responses.

ChatGPT does not invent from nothing. It discovers patterns in an expansive dataset compiled from the internet. From books to articles to websites, billions of words have gone into its training. Using a machine learning architecture called the transformer neural network, it produces remarkably human-like text.

How does it do it? The answer, as complex as it might seem, is right in its name. GPT stands for Generative Pre-trained Transformer. And that’s essentially what ChatGPT does; it generates text based on the pre-training it has received.

To put this in relatable terms, imagine a calculator that learns how to do math by working through a set of solved equations. The calculator doesn’t create each solution from scratch; it follows learned methods to get the answer. Similarly, ChatGPT doesn’t spin its responses out of nothing. It constructs answers based on learned text structures, vocabulary, and syntax.

In the ChatGPT process, duplication or too close a resemblance to the source material is not about unethical copying but rather an inadvertent outcome. It doesn’t remember or store specific documents, books, or sources. Instead, it identifies common themes, style elements, and phrase structures and then uses this learned framework to generate new text when prompted.
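To make the idea of "generating from learned patterns" concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally: ChatGPT uses a transformer neural network, while this toy uses a simple bigram (word-pair) table. The function names `train_bigram_model` and `generate` and the three-sentence corpus are made up for illustration. What it shows is that a model can keep only statistics about which word tends to follow which, and still produce text from them.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record, for every word, which words were seen to follow it."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers

def generate(followers, start_word, max_words=8, seed=0):
    """Walk the learned word-to-word transitions to produce text."""
    rng = random.Random(seed)  # fixed seed so repeated runs match
    output = [start_word]
    while len(output) < max_words:
        options = followers.get(output[-1])
        if not options:  # no known continuation: stop early
            break
        output.append(rng.choice(options))
    return " ".join(output)

# Hypothetical toy corpus, standing in for the training data.
corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
model = train_bigram_model(corpus)
sample = generate(model, "the")
print(sample)
```

Every word pair in the output was seen somewhere in the corpus, yet the sentence as a whole need not appear there. With a corpus this small, the output can also reproduce a training sentence exactly, which is a small-scale version of the accidental resemblance discussed above.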

As we delve deeper into our discussion on whether ChatGPT plagiarizes, it is essential to keep these facts in view. The process of language generation in AI is complex, and it’s outside the bounds of traditional ideas of plagiarism as we know them.

Ethical Implications

Although ChatGPT doesn’t plagiarize in the traditional sense, the debate over the ethical implications of AI language generation is far from over. The issue is complex and goes beyond simple imitation or copying. We need to look at the ethical side of AI technology.

ChatGPT learns patterns and themes from a colossal dataset to generate text. Unintentionally, this might include similarities with the source material. However, it’s essential to note that plagiarism is an intentional representation of someone else’s words or ideas as if they were your own. ChatGPT, on the other hand, merely identifies common themes to generate new text. It does not store any specific documents.

Does this absolve AI from the ethical concerns linked to plagiarism? Not completely. The AI industry and users must understand that ethics aren’t binary. It’s not just about “plagiarizing or not” but rather about implementing responsible AI practices.

AI systems should be designed in a way that respects ethical principles and values. This includes being transparent about how data is used and ensuring respect for privacy rights. Providing due credit and avoiding misrepresentation is also a critical aspect of AI ethics. Whether it’s by highlighting that a text was AI-generated or by indicating potential resemblance with existing data, we need to work towards accountability and responsibility in AI text generation.

Detecting Plagiarism in ChatGPT

As we delve deeper into understanding the operations of ChatGPT, we must pause to explore plagiarism detection. For human-written text, detecting plagiarism is a fairly straightforward process. There are numerous tools available online that can scan a piece of work and compare it to a vast database of existing content. However, detecting plagiarism in AI-generated content, particularly from ChatGPT, becomes a more intricate task.
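The comparison step that such tools perform can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the method of any particular detector: it measures how many three-word sequences (trigrams) in a candidate text also appear in a single source text. The names `word_ngrams` and `overlap_ratio` and the sample sentences are hypothetical.

```python
def word_ngrams(text, n):
    """Return the set of n-word sequences in the text (lowercased)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap_ratio(candidate, source, n=3):
    """Fraction of the candidate's n-grams that also occur in the source."""
    candidate_grams = word_ngrams(candidate, n)
    if not candidate_grams:  # candidate shorter than n words
        return 0.0
    shared = candidate_grams & word_ngrams(source, n)
    return len(shared) / len(candidate_grams)

source = "the quick brown fox jumps over the lazy dog"
copied = "the quick brown fox jumps over the fence"
reworded = "a fast brown fox leaps across a sleepy dog"

print(overlap_ratio(copied, source))    # high: most trigrams are shared
print(overlap_ratio(reworded, source))  # zero: no trigram is shared
```

The reworded sentence carries the same idea as the source but scores zero. That is part of why overlap-based checks struggle with AI-generated text, which tends to restate learned material in new wording.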

The key question is, does the AI merely regurgitate words from its training dataset, or does it create original content based on the learned material? In the case of ChatGPT, it’s more the latter. The machine learning model doesn’t specifically copy and paste phrases or sentences. Instead, it’s producing original content based on patterns and concepts it derived from its extensive learning dataset.

One more factor getting in the way of traditional plagiarism detection is the moment-to-moment operation of GPT models. Specifically, they only take into account a limited amount of prior input, often called the context window, and don’t retain anything beyond it. Because the model is not consulting a stored copy of any document while it writes, a long passage that matches existing text is an unintended by-product of what it learned, not the result of intentional copying.
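The limit on prior input can be pictured as a sliding window over the conversation. The sketch below is a simplification: the helper name `fit_to_context` is hypothetical, words stand in for tokens (real models split text into sub-word tokens), and actual windows hold far more than four tokens.

```python
def fit_to_context(tokens, max_tokens):
    """Keep only the most recent tokens that fit in the window."""
    if max_tokens <= 0:
        return []
    return tokens[-max_tokens:]

# Assumption: whitespace-separated words stand in for model tokens.
conversation = "please summarize the article we discussed at the very start".split()
visible = fit_to_context(conversation, max_tokens=4)
print(visible)  # ['at', 'the', 'very', 'start']
```

Anything that falls outside the window, here the words "please summarize the article we discussed", is simply not available to the model when it produces the next response.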

Still, the ethics of AI-generated content come into question with respect to its relationship with the original source material. If the output closely resembles that source material, even if unintentionally, issues of attribution and credibility arise. So, the problem of plagiarism detection in ChatGPT merges into a larger debate about ethics, usage rights, and transparency in AI operations.

Yet, let’s not forget the many possibilities these AI tools offer. They provide opportunities for efficient content creation, language translation, customer service, and countless other applications. As we continue to explore and understand this field, it’s crucial to bridge the gap between technical innovation and ethical practices. These are not mutually exclusive aspects but rather complementary facets that will ensure responsibility and transparency in AI.

Conclusion

So, does ChatGPT plagiarize? It’s a complex question with no easy answer. From my exploration, it’s clear ChatGPT generates original content, not directly copying from its dataset. Yet, the unintentional similarities to source material can’t be ignored. They pose ethical questions about attribution and credibility. Remember, while AI tools like ChatGPT hold immense potential for various applications, we mustn’t lose sight of the ethical considerations. As we continue to innovate, let’s also strive for accountability and transparency in our AI practices. That’s the balanced approach we need to adopt.

What is the article about?

The article discusses the difficulty of detecting plagiarism in AI-generated content, with a specific focus on the ChatGPT tool. It explains that these complications arise because ChatGPT generates new text rather than copying existing text, and it discusses the ethical concerns this raises.

How does ChatGPT generate its content?

ChatGPT produces content based on learned patterns from its training dataset. It is not directly copied from this data, which introduces a unique challenge for plagiarism detection in AI outputs.

What are the ethical concerns mentioned in the article?

The article points to the ethical dilemma created when GPT models unintentionally produce text that resembles their source material. It raises concerns about the correct attribution of source material and about maintaining credibility in AI outputs.

Is ChatGPT beneficial despite these challenges?

Yes, the article recognizes the vast potential of the ChatGPT tool for different applications. However, it underscores the need to balance innovative technology with ethical responsibility in order to achieve transparency and accountability in AI.