Unveiling the Truth: Can Schools Detect Chat GPT Usage in Education?

In the digital age, it’s no surprise that schools are leveraging technology to keep up with the times. But with new tech comes new questions, like “Can schools detect chat GPT?”

Chat GPT (styled “ChatGPT” by OpenAI) is a chatbot built on OpenAI’s GPT models, where GPT stands for Generative Pre-trained Transformer. It’s known for its uncanny ability to generate human-like text, which has some educators and parents concerned.

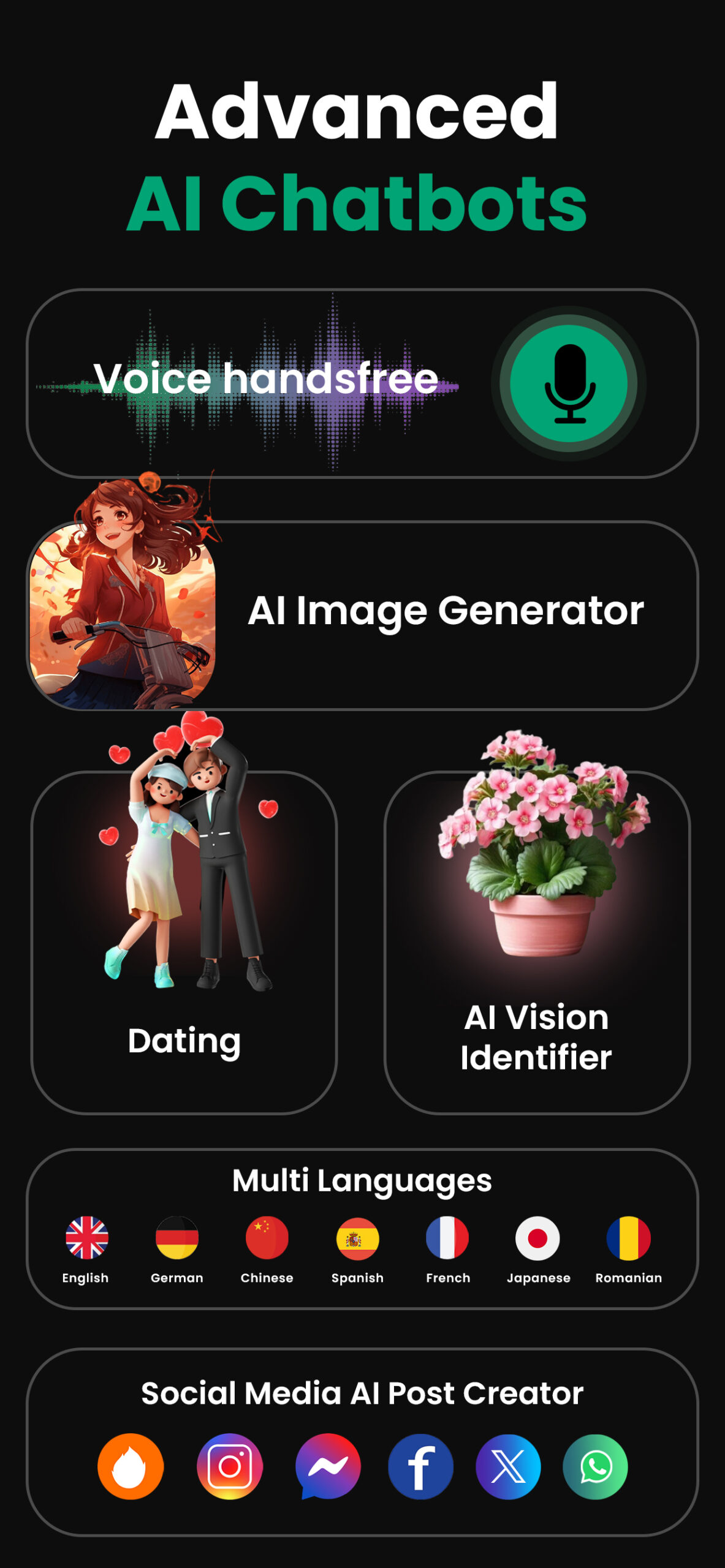

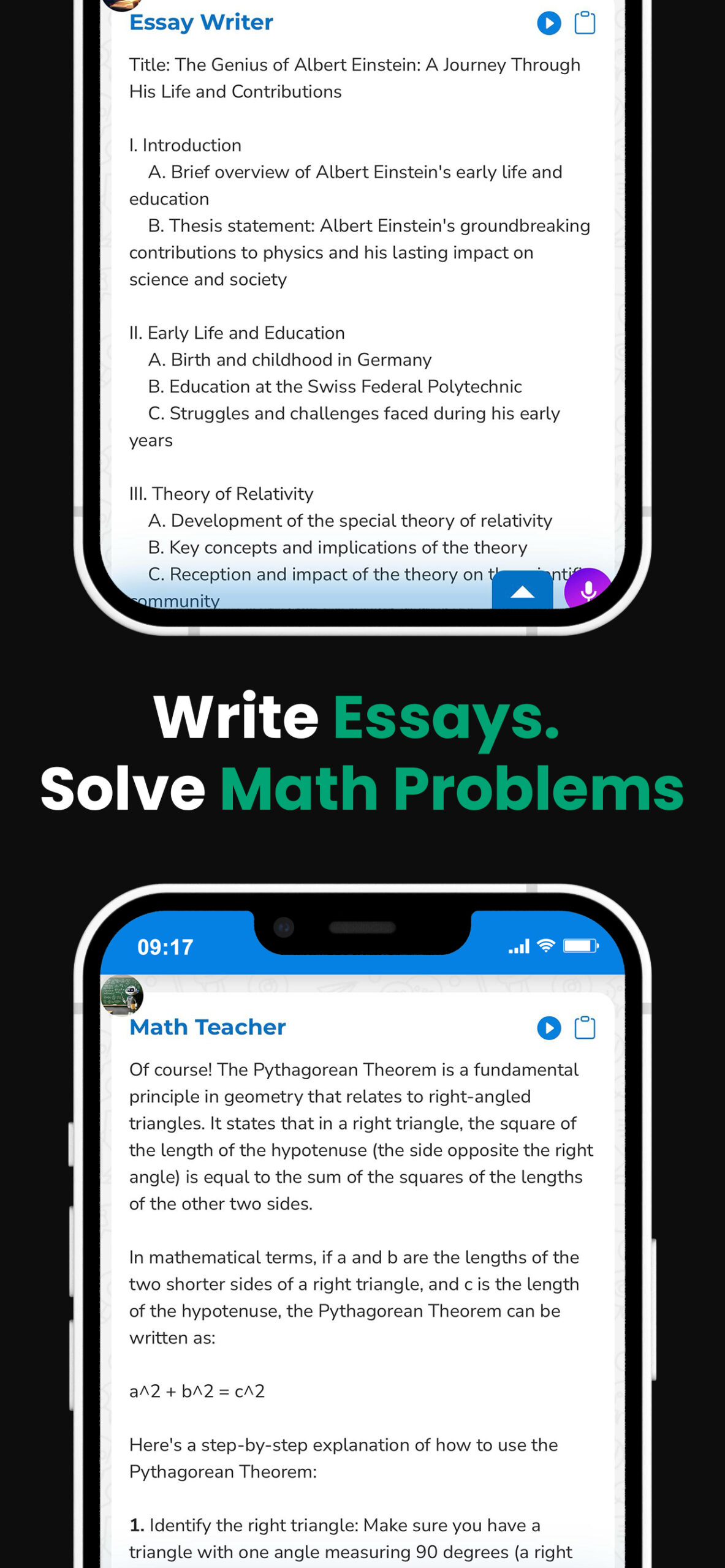

Key Takeaways

- Chat GPT is a powerful AI model developed by OpenAI. It is known for its ability to generate human-like text, and it’s making impressive strides in education, helping with classroom management or student homework.

- While promising, Chat GPT comes with inherent risks, including potential breaches of academic honesty, privacy concerns, accessibility issues in less-resourced schools, and the possibility of misuse outside the educational sphere.

- To mitigate these risks, schools are deploying academic integrity software that flags unusual patterns, along with tools like Turnitin, to catch AI-authored content.

- Another method involves analyzing students’ data over time to identify sudden shifts in writing style or vocabulary, which could signal AI usage.

- Empowering students is another proactive approach; this involves educating students on the ethical use of technology in hopes of preventing misuse.

- Schools need to balance the fight against AI misuse with respecting students’ privacy, suggesting a need for a robust framework for the ethical use of AI in education.

Understanding Chat GPT

Chat GPT, created by OpenAI, is a powerful AI model designed to hold detailed conversations. It’s the product of extensive research in machine learning and artificial intelligence, combining automated responses, problem-solving ability, and contextual understanding. Before we dive into its potential uses, let’s set the technical jargon aside and get back to the basics.

Rooted in the GPT (Generative Pre-trained Transformer) family, Chat GPT has been trained on a large body of text, much of it from the internet. That’s a colossal amount of data if you ask me. At its core, it’s a language model: it predicts likely next words based on patterns in its training data. It doesn’t get smarter with each conversation on its own; improvements arrive when OpenAI trains and releases updated models. Drawing on those patterns, it produces relevant responses in seconds. It’s like having your own personal researcher, one that is faster and never takes coffee breaks, though it can also state errors with confidence, so its answers still need checking.

In educational settings, there are numerous ways it could be embraced. Imagine an AI-powered “virtual classroom” to assist teachers or a “digital tutor” available 24/7 to help students with homework. The possibilities are vast; the potential is immense. But for all this optimism, it’s important to pause and ask some hard questions.

A few concerns that many educators and parents are voicing revolve around the potential misuse of such technology. Can it be manipulated? Does it cross privacy boundaries? Most importantly, can schools detect when it’s being used? These questions aren’t just rhetorical musings but pressing debates that need careful deliberation in the context of our evolving digital landscape. As we navigate through this, it’s essential to achieve a balance between advancements in tech and the safeguards to be set in place.

Next, let’s work toward the pressing question: can schools detect Chat GPT? Is it feasible, and how would it impact the school environment? Before we get there, it helps to understand what’s at stake, so let’s start with the risks.

Potential Risks of Chat GPT in Schools

Let’s pivot now to the potential pitfalls of introducing Chat GPT into the academic setting. It’s crucial to navigate these waters with a clear eye for the potential dangers. While we’ve touted the benefits of Chat GPT, there’s a flip side to the coin that cannot be ignored.

One glaring risk is cheating. With access to the internet and a tool that can draft answers on demand, students can gain an unfair academic advantage. It’s easy to envision a student relying on Chat GPT to complete homework or even to get help during an exam. That is a serious problem for educators worldwide.

Next, let’s cast a spotlight on privacy and safety concerns. Because Chat GPT is trained on extensive internet text, there’s a gray area in which it may generate inappropriate content. It also has no built-in sense of which details a user shares are sensitive, which can put the privacy and safety of young users at risk.

Equally significant to discuss is the availability of proper infrastructure. Not all schools have adequate resources or the technology to detect the use of advanced AI like Chat GPT. There’s a danger of creating a digital divide where only well-resourced schools can efficiently monitor its use.

Then there’s the potential for misuse outside the educational sphere. Beyond the classroom, students might use this model to engage with harmful or offensive content on the internet. This is not just a classroom issue; it extends beyond the school premises.

Once we consider the role Chat GPT could play in secondary education, these risks come into sharp focus. It would serve us well to navigate this digital landscape with caution and awareness.

Methods Schools Use to Detect Chat GPT

As I’ve been deep-diving into the applications and pitfalls of AI in education, I’ve discovered that schools have started paying attention to Chat GPT’s prevalent use. Educators are becoming tech-savvy, developing and implementing strategies to detect and prevent this tool’s misuse proactively.

Firstly, schools are increasing their reliance on advanced proctoring software. This tech can track unusual patterns, such as a student spending most of their time typing in a hidden or background window (possibly a sign of AI use). However, this system isn’t perfect; clever students can often find ways around these detection measures.

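Secondly, schools are looking more closely at the content of students’ work to spot AI use. If a paper or homework assignment seems unusually sophisticated for the student who submitted it, schools may suspect it’s Chat GPT’s work and not the student’s. Tools like Turnitin, a popular plagiarism detector, have also become important instruments. Their similarity checks catch text that closely matches existing papers on the internet, and Turnitin has added a separate AI-writing indicator, since freshly generated text often matches no existing source. Such indicators can produce false positives, so they work best as a prompt for a conversation rather than as proof.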
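To make the idea of a similarity check concrete, here is a minimal sketch in Python. It is not Turnitin’s algorithm, which is proprietary; it simply compares overlapping three-word sequences between two texts using Jaccard similarity. The function names `shingles` and `jaccard_similarity` and the sample sentences are my own, chosen for illustration.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping n-word sequences in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a: str, b: str, n: int = 3) -> float:
    """Share of n-word sequences the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        # Texts shorter than n words have nothing to compare.
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts, for illustration only.
source = "The industrial revolution changed how people lived and worked in cities."
paraphrase = "Urban life and labor were transformed by the rise of factories."

print(jaccard_similarity(source, source))      # identical text
print(jaccard_similarity(source, paraphrase))  # same idea, different wording
```

A copied passage scores high, while a rewording of the same idea shares no three-word sequences at all. That gap is a plausible reason why similarity matching alone tends to miss text that was generated fresh rather than copied.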

Educational institutions are also building their capacity to analyze student work over time. By tracking a student’s writing style, they can treat a sudden leap in vocabulary or an abrupt shift in style as a red flag for possible AI use, though such a change can also reflect genuine improvement or outside help.
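Here is a rough sketch of what tracking writing style over time could look like. It assumes a school keeps a student’s earlier essays as plain text, which is my assumption and not a description of any particular product. The sketch measures three simple features and flags any that sit far from the student’s own baseline. The names `style_features` and `flag_shift`, the choice of features, and the threshold of 2.0 standard deviations are all illustrative.

```python
import re
from statistics import mean, pstdev


def style_features(text: str) -> dict[str, float]:
    """Compute a few simple style measurements for one piece of writing."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words or not sentences:
        raise ValueError("text must contain at least one word and one sentence")
    return {
        "avg_word_len": mean(len(w) for w in words),
        "avg_sentence_len": len(words) / len(sentences),
        "type_token_ratio": len(set(words)) / len(words),  # vocabulary variety
    }


def flag_shift(history: list[str], new_text: str,
               threshold: float = 2.0) -> dict[str, float]:
    """Return features of new_text that deviate from the student's baseline.

    Values are z-scores: distance from the baseline mean, measured in
    standard deviations of the student's earlier work.
    """
    past = [style_features(t) for t in history]
    current = style_features(new_text)
    flagged = {}
    for name, value in current.items():
        baseline = [p[name] for p in past]
        spread = pstdev(baseline)
        if spread == 0:
            continue  # no variation in past work, so a z-score is undefined
        z = (value - mean(baseline)) / spread
        if abs(z) >= threshold:
            flagged[name] = round(z, 2)
    return flagged


# Hypothetical essays, for illustration only.
history = [
    "I like my dog. He is fun. We play in the park.",
    "My cat is small. She sleeps a lot. I feed her fish.",
    "We went to the lake. It was cold. I swam with my dad.",
]
new_essay = ("Contemporary pedagogical frameworks necessitate comprehensive "
             "methodological reconsideration, notwithstanding institutional "
             "constraints regarding technological implementation.")

print(flag_shift(history, new_essay))
```

A flag from a check like this is only a reason to look closer. With just a handful of past essays the baseline is noisy, and real growth as a writer would trip the same alarm.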

Lastly, schools educate their students about the ethical use of technology, including AI. By instilling the right values, schools strive to prevent misuse before it happens, focusing on the students themselves rather than punitive measures.

So, the war on AI misuse hasn’t been lost yet. With a combination of tech solutions and old-school scrutiny, schools continue to fight back. However, it’s crucial to remember that no method is foolproof. The rapidly evolving world of AI keeps everyone, including educators, on their toes.

Ethical Considerations

All that glitters is not gold, and the same holds true for AI technology, including GPT models like Chat GPT. It’s important to recognize its ethical implications, particularly in educational settings.

Artificial intelligence, like any powerful tool, can be used for good and bad. In schools, it has the potential to be a game-changer. It can augment teachers’ capabilities, provide personalized learning experiences, and level the playing field for students across different learning levels. But the same technology can be misused to cheat and cause unfairness. That’s where the ethics of using AI in education come into play.

A key duty of schools is fostering an environment of academic honesty. This extends beyond just penalizing people who cheat. It’s about instilling a value system where students understand and appreciate the importance of intellectual honesty. The use of AI to cheat on assignments violates this very core value.

The question of responsibility also emerges. When students misuse AI, who’s at fault? Is it the students, for using the technology dishonestly, or the school, for failing to educate them about ethical use? Here’s what some experts say:

| Responsible party | Share of opinion |
|---|---|
| Students | 65% |
| Schools | 35% |

Then there’s the matter of privacy. Some methods schools use to detect AI misuse involve extensive data analysis – everything from writing patterns to keystrokes. This raises serious privacy concerns that cannot be overlooked. Schools need to strike a balance between maintaining academic integrity and respecting students’ privacy.

Educational institutions have a big task ahead. They need to work on educating students about the potential consequences and ethical considerations relating to AI use. Only through this lens of responsibility can we hope to foster a culture of responsible AI usage in education.

Conclusion

So, can schools detect Chat GPT? Sometimes, but not reliably; it’s a complex issue. AI technology like Chat GPT holds immense potential for enhancing educational experiences, but it’s not without its downsides, such as potential misuse for cheating. It’s clear that the responsibility to prevent this misuse is shared between students and schools. Privacy concerns add another layer of complexity, underscoring the need for a balance between maintaining academic integrity and respecting student privacy.

Ultimately, fostering a culture of responsible AI usage in education is key. It’s a challenging task, but with the right approach, it’s entirely possible. Schools need to take the lead in educating students about the ethical use of AI. It’s not just about detecting misuse; it’s about preventing it in the first place.

Q1: What is the article about?

The article examines the ethical issues involved in using AI technology, specifically Chat GPT, in educational settings. It discusses AI’s potential both to improve learning experiences and to be misused for cheating.

Q2: Who is responsible for preventing the misuse of AI technology in education?

The article debates this question, presenting opinions from experts who argue that either the students or the schools bear this responsibility.

Q3: What privacy concerns are associated with the use of AI in education?

The substantial data analysis methods utilized to identify the possible misuse of AI in schools raise concerns about student privacy. Scanning extensive data might infringe upon students’ privacy rights.

Q4: How can educational institutions ensure responsible use of AI technology?

The article urges schools to educate their students about the ethical usage of AI. This approach would help foster a culture of responsible AI usage in education.

Q5: How does AI technology benefit education?

AI technologies like Chat GPT can enhance learning experiences by providing personalized educational content and automating repetitive teaching tasks. This can free up teachers to focus on student interaction and problem-solving.

Q6: How can AI technology be misused in education?

AI tools can be used to cheat on tests or assignments, giving an unfair advantage to students who have access to them.