Maximizing the Benefits of Overclocked GPT: A Guide to Safe Implementation

If you’re like me, always on the hunt for the next big thing in tech, you’ve likely heard the buzz about overclocked GPT. It’s a term that’s been making waves in the tech world, and for good reason. This isn’t just another tech fad; overclocked GPT is revolutionizing how we approach computing.

GPT (Generative Pre-trained Transformer) is a powerful tool that’s changing the game, and overclocking it is all about boosting the performance of your machine learning models, pushing them to their limits, and achieving results that were once thought impossible. So, let’s dive in and explore the exciting world of overclocked GPT.

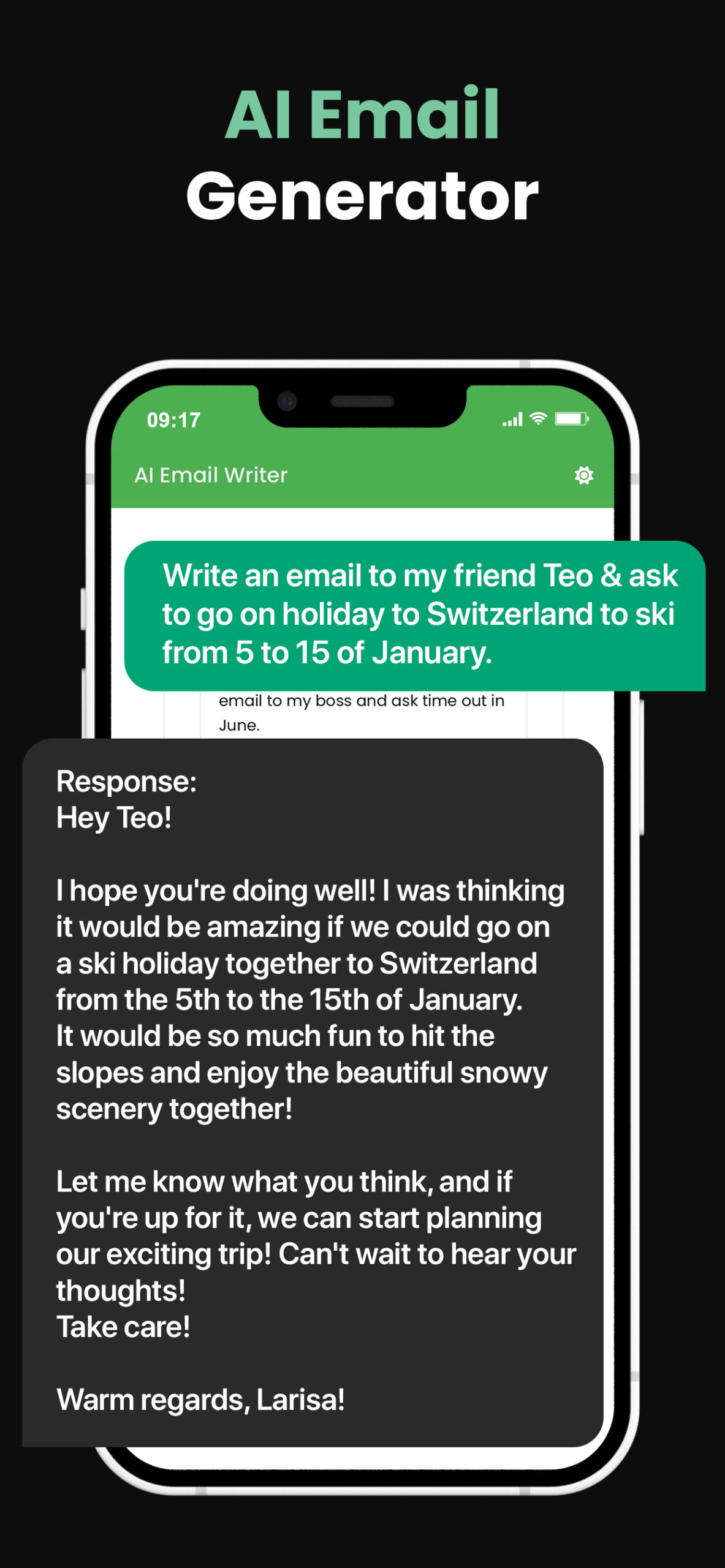

PowerBrain AI Chat App powered by ChatGPT & GPT-4

Download iOS: AI Chat

Download Android: AI Chat

Read more on our post about ChatGPT Apps & Chat AI App

Key Takeaways

- Overclocked GPT (GPT stands for Generative Pre-trained Transformer) represents a breakthrough in pushing machine learning models beyond their typical performance limits.

- The process of overclocking a GPT model involves providing the model with a vast quantity of high-quality, diverse training data and refining the learning algorithms.

- An overclocked GPT model is able to handle large datasets while processing information faster and generating more accurate and refined outputs due to iterative learning.

- Potential benefits of overclocked GPT models include significant gains in speed and efficiency, refined accuracy, the ability to handle massive datasets, a competitive advantage in AI applications, and inherent scalability.

- Alongside the benefits, inherent risks and challenges are associated with overclocking GPT models, such as increased power consumption leading to overheating, increased error rates due to fast-paced data processing, and security and privacy risks due to high-speed data movement.

- Safe and effective implementation of overclocked GPT models requires effective model training, optimal cooling solutions, advanced security measures, diligent assessment of power consumption, and the support of a team with technical expertise in GPT models.

Understanding Overclocked GPT

Diving deeper into the specifics, overclocked GPT is a buzzword that has been building momentum in the tech industry. But what exactly is it? Put simply, overclocking a GPT model means powering it up beyond its typical performance. It’s not just about speed, though that’s definitely part of it; the process also aims to maximize the model’s efficiency, effectiveness, and overall performance.

So, why is this significant? Overclocking the GPT model amplifies the capabilities of machine learning applications. Models work faster, process more complex datasets, and deliver more accurate and refined outputs. AI systems become more capable and responsive, generating text that’s not only syntactically correct but also semantically meaningful.

Let’s look at some numbers. Assume we’re overclocking a hypothetical GPT model for a specific task, let’s say, generating text in real time. Suppose the standard model processes about 200 words per minute. By overclocking it, you could boost this figure significantly.

| GPT Model | Throughput (words/min) |
|---|---|
| Traditional GPT | 200 |
| Overclocked GPT | 250-300 (estimated) |

These figures aren’t exact; they’re purely illustrative. Actual numbers will depend on the specifics of the overclocking process and the characteristics of the GPT model in question.
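Because the table’s figures are illustrative, it can help to express them as a relative gain over the baseline. This short Python sketch does only that arithmetic, using the hypothetical 200 and 250-300 words-per-minute figures from the table: (250 - 200) / 200 is a 25% gain, and (300 - 200) / 200 is a 50% gain.

```python
# Illustrative figures from the table; these are not benchmark results.
BASELINE_WPM = 200
OVERCLOCKED_WPM_RANGE = (250, 300)


def relative_gain(baseline: float, improved: float) -> float:
    """Return the improvement over the baseline, as a percentage."""
    return (improved - baseline) / baseline * 100


for wpm in OVERCLOCKED_WPM_RANGE:
    gain = relative_gain(BASELINE_WPM, wpm)
    print(f"{wpm} words/min is a {gain:.0f}% gain over {BASELINE_WPM} words/min")
```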

In short, overclocked GPT is more than just a concept. It’s an influential tool that’s transforming technology as we know it. With overclocked GPT, the boundaries and limits on what machine learning models can achieve are being redrawn. Greater things are on the horizon in the world of artificial intelligence. It’s fair to say that the future is overclocked.

How Does Overclocked GPT Work?

Just like overclocking a computer processor, overclocking a GPT model boosts its performance. But how does this process work? Let’s delve into the nitty-gritty.

First off, it’s not about fiddling with physical knobs or tweaking settings in a traditional sense. The overclocking process is more about data manipulation and training. I’ll take you through the general process.

Training Data and Algorithms

An overclocked GPT model, like its standard counterpart, is driven by training data and learning algorithms. However, the quantity and quality of this data are amplified. To overclock, you feed the GPT model a massive amount of high-quality, diverse data. This isn’t just any data: the carefully curated information spans numerous domains, ensuring broad knowledge coverage and diversity.
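The article doesn’t spell out how that data is selected, so the following is only a minimal sketch under two assumptions: “high-quality” is approximated by dropping exact duplicates (after normalizing case and whitespace), and “diverse” by sampling examples from several domain pools according to weights you choose. The functions `deduplicate` and `mix_domains` and the example pools are hypothetical.

```python
import random


def deduplicate(texts: list[str]) -> list[str]:
    """Drop exact duplicates, ignoring case and extra whitespace; keeps first occurrences."""
    seen = set()
    unique = []
    for text in texts:
        key = " ".join(text.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(text)
    return unique


def mix_domains(pools: dict[str, list[str]], weights: dict[str, float],
                n: int, seed: int = 0) -> list[str]:
    """Draw n examples, picking each example's domain in proportion to its weight."""
    rng = random.Random(seed)  # seeded so the mix is reproducible
    domains = list(pools)
    chosen = rng.choices(domains, weights=[weights[d] for d in domains], k=n)
    return [rng.choice(pools[d]) for d in chosen]


# Hypothetical domain pools, deduplicated before sampling.
pools = {
    "news": deduplicate(["Markets rose today.", "markets rose  today.", "Rain is expected."]),
    "code": deduplicate(["def add(a, b): return a + b"]),
    "science": deduplicate(["Water boils at 100 C at sea level."]),
}
sample = mix_domains(pools, {"news": 0.5, "code": 0.25, "science": 0.25}, n=8)
```

Adjusting the weights shifts how much each domain contributes to the training mix; real pipelines typically add filtering steps beyond exact-duplicate removal.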

Handling Large Datasets

Because of this *large influx of data*, the GPT model learns to handle and process vast datasets, which is critical for completing complex computations or tasks quickly and efficiently. Overclocking, therefore, improves the model’s ability to process large datasets.
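In practice, handling large datasets usually starts with not loading everything into memory at once. A minimal Python sketch of that idea streams records and groups them into fixed-size batches; `stream_lines` and `batched` are illustrative names, not part of any GPT toolkit (Python 3.12 also ships `itertools.batched`, which yields tuples).

```python
from itertools import islice
from typing import Iterable, Iterator


def stream_lines(path: str) -> Iterator[str]:
    """Read a large text file one line at a time instead of all at once."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            yield line.rstrip("\n")


def batched(records: Iterable[str], batch_size: int) -> Iterator[list[str]]:
    """Yield lists of up to batch_size records, pulling lazily from the source."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while batch := list(islice(iterator, batch_size)):
        yield batch
```

For example, `for batch in batched(stream_lines("corpus.txt"), 1024): ...` would work through a hypothetical `corpus.txt` 1,024 lines at a time, so memory use tracks the batch size rather than the file size.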

Iterative Learning

Learning doesn’t stop there. The model identifies patterns, learns, unlearns, and relearns in an iterative manner. Each iteration improves its ability to recognize patterns in text or data and to predict what comes next in a sequence. Over time, the model becomes smarter, quicker, and more accurate.
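Iterative learning can be illustrated with a toy example. Models like GPT are trained with gradient-based optimization: each step nudges the parameters in the direction that reduces prediction error. The sketch below applies the same idea to a single parameter `w` in the model `y = w * x` rather than to a transformer, so it only shows the shape of the loop, not how GPT itself is trained.

```python
def train(xs: list[float], ys: list[float], lr: float = 0.01, epochs: int = 200):
    """Fit y = w * x by gradient descent on mean squared error; return w and the loss per epoch."""
    w = 0.0
    history = []
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # step against the gradient to reduce the error
        loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
        history.append(loss)
    return w, history


# Toy data generated by y = 2x; the loop should recover w close to 2.
w, history = train([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
print(f"learned w = {w:.4f}, loss went from {history[0]:.4f} to {history[-1]:.6f}")
```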

Enhanced Efficiency and Accuracy

The magic of overclocked GPT doesn’t end at speed. Because these models are exposed to more diverse data, they can generate more accurate and refined outputs, making AI systems smarter.

Maintaining the balance between processing speed, efficiency, and accuracy is key in overclocked GPT. Getting this balance right ensures that accuracy isn’t sacrificed for speed, or vice versa.
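One way to make that balance concrete is to set an accuracy floor and then pick the fastest configuration that clears it. The sketch below assumes you have already measured throughput and validation accuracy for a few candidate configurations; the `RunConfig` type, the configuration names, and the numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RunConfig:
    name: str
    words_per_minute: float
    accuracy: float  # fraction of validation outputs judged correct (0.0 to 1.0)


def fastest_acceptable(configs: list[RunConfig], min_accuracy: float) -> Optional[RunConfig]:
    """Return the highest-throughput config whose accuracy meets the floor, or None."""
    acceptable = [c for c in configs if c.accuracy >= min_accuracy]
    if not acceptable:
        return None
    return max(acceptable, key=lambda c: c.words_per_minute)


# Hypothetical measurements, not real benchmark results.
candidates = [
    RunConfig("baseline", 200, 0.92),
    RunConfig("tuned", 270, 0.90),
    RunConfig("aggressive", 320, 0.81),
]
choice = fastest_acceptable(candidates, min_accuracy=0.88)
print(choice.name if choice else "no configuration meets the accuracy floor")
```

Raising `min_accuracy` trades speed for precision; lowering it does the opposite.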

Without jumping to conclusions, you could say that overclocking gives GPT models a boost in both intelligence and speed. It’s a tremendous breakthrough in artificial intelligence and machine learning.

Benefits of Overclocking GPT Models

Let’s delve into the key benefits of overclocking GPT models.

Speed and Efficiency

Borrowing from the concept of overclocking a computer, overclocked GPT models excel at swiftly processing vast amounts of data, which makes them a game-changer for businesses dealing with large-scale datasets. They provide a significant speed boost in training, information processing, and output generation. Where standard GPT models might take hours, an overclocked model might do the job in a fraction of that time.
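Speed claims like these are easy to check if you measure throughput the same way for both setups. In this minimal sketch, `generate` stands in for whatever function calls your model (it is a placeholder, not a real API), and `fake_generate` is a hypothetical stand-in used only to exercise the harness, which reports words per minute, the same unit used in the illustrative table earlier.

```python
import time
from typing import Callable


def words_per_minute(generate: Callable[[str], str], prompts: list[str]) -> float:
    """Time generate() over all prompts and return output words per minute."""
    start = time.perf_counter()
    total_words = sum(len(generate(prompt).split()) for prompt in prompts)
    elapsed = time.perf_counter() - start
    return total_words / elapsed * 60 if elapsed > 0 else float("inf")


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a model call: sleeps briefly and returns fixed text."""
    time.sleep(0.01)
    return f"Reply to {prompt}: this is placeholder text."


rate = words_per_minute(fake_generate, ["a", "b", "c"])
print(f"{rate:.0f} words/min")
```

Running the same harness on the same prompts against the standard and the overclocked setup gives a like-for-like comparison.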

Refined Accuracy

Overclocked GPT models are not just about speed; they’re also about enhancing accuracy. Iterative learning enables the system to recognize patterns and predict sequences with a higher degree of precision. Over time, it makes the AI model smarter and more capable of producing refined, accurate outputs.

Massive Data Handling

The overclocking technique supercharges a GPT model’s ability to handle massive datasets. Today’s digital world generates unthinkable amounts of data daily. Overclocked GPT models make it possible to effectively analyze, study, and promptly gain valuable insights from this data deluge.

Competitive Advantage

Seeking an edge in the competitive tech industry? Overclocked GPT models could be the answer. Since they process information rapidly and accurately, these models can give companies a significant head start in machine learning and AI applications.

Scalability

In a tech world that’s always evolving, scalability is crucial. Overclocked GPT models are inherently scalable since they are designed to handle large datasets without compromising on processing speed or accuracy.

Boosting performance and enhancing capabilities are the prime objectives here, and the delicate balance between processing speed, efficiency, and accuracy remains crucial. But, like any technology, overclocking GPT requires careful handling to ensure optimal performance. After all, power without control is nothing.

Risks and Challenges of Overclocking GPT

While the benefits of overclocking GPT models can be substantial, there are inherent risks and challenges to consider. It’s essential to approach this technology with a comprehensive understanding of both its promise and pitfalls.

Overclocking can lead to higher power consumption, which, in turn, may cause an increase in heat production. This additional heat can potentially strain the system, causing hardware damage or instability. Keeping overheating at bay becomes a significant challenge since it requires enhanced cooling systems and power supply units, increasing operational costs.

As a potent tool in AI advancement, overclocked GPT models can manage enormous data sets and accelerate the speed of processing. However, this swift processing speed comes at the expense of potentially increased error rates. While these models are designed to learn iteratively and refine their output, fast-paced data processing may sometimes compromise quality and accuracy.

An overclocked GPT model’s ability to handle copious amounts of data efficiently can expose a business to security and privacy risks. As data moves at incredibly high speeds, protecting it becomes increasingly challenging. Breaches could lead to financial losses or damage to the company’s reputation.

The challenge of balancing speed, efficiency, and precision is central to AI and machine learning. Overclocking GPT models amplifies this challenge. Maintaining the delicate balance between these key aspects becomes an uphill task as computational power increases.

Bear in mind that mastering this technology also requires vast technical expertise. Skilled technicians who understand the intricacies of GPT models are not always readily available, and the hiring or training cost often falls on the business.

| Risks and Challenges | Description |
|---|---|
| Overheating | Increased heat from higher power consumption could damage the hardware. |
| Increased Error Rates | Fast-paced data processing can lead to quality and accuracy drop-offs. |
| Security & Privacy | High-speed data movement poses an increased risk for breaches. |
| Balancing Speed, Efficiency, Precision | Increasing speed & efficiency can compromise precision. |
| Resources | Requires substantial technical expertise and can add to operational costs. |

Implementing Overclocked GPT Safely

Transitioning to overclocked GPT models isn’t something to take lightly. Due to the potential for higher power consumption, excess heat, increased error rates, and privacy concerns, implementing these models safely requires a well-crafted approach. It’s practically an art, and I’m here to share selected strategies to help you conquer the beast.

Firstly, training your model effectively is key to mitigating error rates. Just as musicians fine-tune their instruments for optimal sound, data scientists need to train their GPT models for the best performance. On the hardware side, specialized software exists that lets you adjust the clock speeds of the processors your model runs on, helping fine-tune performance.
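A common training practice for limiting overfitting, and with it error rates on new data, is early stopping: track the error on held-out validation data and stop once it has not improved for a few evaluations. The article doesn’t name a specific technique, so this is an assumed example; `should_stop`, `patience`, and `min_delta` are illustrative names.

```python
def should_stop(val_errors: list[float], patience: int = 3, min_delta: float = 0.0) -> bool:
    """Return True if the last `patience` validation errors never beat the earlier best by min_delta."""
    if len(val_errors) <= patience:
        return False  # not enough history to judge yet
    best_before = min(val_errors[:-patience])
    recent_best = min(val_errors[-patience:])
    return recent_best >= best_before - min_delta


# Hypothetical validation errors recorded after each epoch.
errors = [0.50, 0.41, 0.35, 0.36, 0.37, 0.38]
print(should_stop(errors))
```

Checking `should_stop` after each epoch and saving the best-scoring checkpoint lets training end before the model starts memorizing its training data.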

Next up, optimal cooling solutions. Hardware running overclocked GPT models churns out more heat than your average set-up, so ensuring that you have adequate cooling systems in place is vital. This could mean investing in enhanced cooling solutions or even looking at external options.

Advanced security measures become even more important with overclocked GPT. Because these models process and transfer data so rapidly, additional measures are needed to keep that data secure. Strong encryption, firewalls, and strict security protocols can go a long way in protecting sensitive information.
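Encryption itself should come from a vetted source, such as TLS for data in transit or a well-reviewed cryptography library, since Python’s standard library does not provide a general-purpose encryption API (its `ssl` module covers TLS connections). A complementary measure the standard library does cover is message authentication: this sketch attaches an HMAC-SHA256 tag to each payload so that tampering in transit is detected. It provides integrity, not confidentiality, and the key handling and the example payload are simplified, hypothetical illustrations.

```python
import hashlib
import hmac
import secrets


def sign(payload: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag for the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Compare tags in constant time to avoid leaking information through timing."""
    return hmac.compare_digest(sign(payload, key), tag)


# In production the key would come from a secrets manager, not be generated per run.
key = secrets.token_bytes(32)
message = b'{"prompt": "Summarize the quarterly report"}'
tag = sign(message, key)
print(verify(message, tag, key))         # intact message
print(verify(message + b" ", tag, key))  # tampered message
```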

Assessing and minimizing power consumption is a further essential step. Monitoring tools can be utilized to provide real-time information about your system’s power usage, assisting in identifying and resolving potential issues.
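As one example of such monitoring, on machines with NVIDIA GPUs the `nvidia-smi` tool can report per-GPU power draw and temperature in CSV form. This sketch assumes `nvidia-smi` is installed and on the PATH; the alert thresholds you pass to `over_limit` are hypothetical and should come from your hardware’s specifications. Fields the GPU does not report are parsed as `None` rather than raising an error.

```python
import subprocess
from typing import Optional

# Ask nvidia-smi for index, power draw (W), and temperature (C), without headers or units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,power.draw,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def _to_float(field: str) -> Optional[float]:
    """Convert a numeric field, returning None for non-numeric values such as 'N/A'."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_readings(csv_text: str) -> list[dict]:
    """Parse one 'index, power, temperature' line per GPU into dicts."""
    readings = []
    for line in csv_text.strip().splitlines():
        index, power, temp = (field.strip() for field in line.split(","))
        readings.append({"gpu": int(index), "power_w": _to_float(power), "temp_c": _to_float(temp)})
    return readings


def over_limit(readings: list[dict], max_power_w: float, max_temp_c: float) -> list[dict]:
    """Return readings that exceed either threshold; missing values are not flagged."""
    return [
        r for r in readings
        if (r["power_w"] is not None and r["power_w"] > max_power_w)
        or (r["temp_c"] is not None and r["temp_c"] > max_temp_c)
    ]


def read_gpus() -> list[dict]:
    """Run nvidia-smi once and parse its output (raises if the command fails)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_readings(result.stdout)
```

Calling `read_gpus()` on a schedule and passing the result to `over_limit` gives a simple alert check; an existing monitoring stack would be the more robust option for production.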

Lastly, you shouldn’t try to navigate these waters alone. Retaining a team of GPT experts provides a valuable knowledge base and safety net. Their wealth of experience can prove vital in ensuring your overclocked GPT implementation is successful and safe. To sum up, it’s a case of slowing down to speed up, weighing up the potential risks before jumping headfirst into the world of overclocked GPT models.

Conclusion

Let’s not overlook the significance of safe implementation when it comes to overclocked GPT models. It’s crucial to tackle concerns like higher power consumption, excess heat, and increased error rates. Don’t forget about the privacy risks, too. Remember, the key lies in effective model training, optimal cooling solutions, advanced security measures, and careful power monitoring. You’d do well to tap into the knowledge of GPT specialists. So before diving into overclocked GPT models, ensure you’ve weighed all potential risks. Always approach with caution and thorough consideration. It’s not just about harnessing the power of these models but doing so in a safe and sustainable way.

Frequently Asked Questions

What are the concerns with implementing overclocked GPT models?

The main concerns are higher power consumption, excess heat, increased error rates, and privacy risks. If not managed properly, these can lead to adverse situations and compromise system performance.

How can I safely implement overclocked GPT models?

Safe implementation requires several strategies: effective model training, optimal cooling solutions, advanced security measures, and minimizing power consumption. It’s also wise to seek the expertise of GPT specialists.

Why do I need to exercise caution before transitioning to overclocked GPT models?

These models come with potential risks. Considering these risks and implementing safety strategies before transitioning to overclocked GPT models is crucial to prevent possible system failures or performance compromise.