Unveiling the Truth: Why is Chat GPT Slow & How To Boost Its Speed?

Ever wondered why your chat GPT seems to be dragging its virtual feet? I’ve been there too. It can be frustrating when you’re trying to get things done, and your chatbot is moving slower than a snail on vacation.

A lot goes on behind the scenes to affect the speed of your chat GPT. It’s not as simple as it might seem. Numerous factors, from the complexity of the model to the server’s capacity, can influence the speed.

In this article, we’ll delve into why your chat GPT might be slow. We’ll unpack the technical aspects, shedding light on what’s happening behind that loading icon. So, if you’re tired of watching your digital hourglass, stick around; we’re about to speed things up.

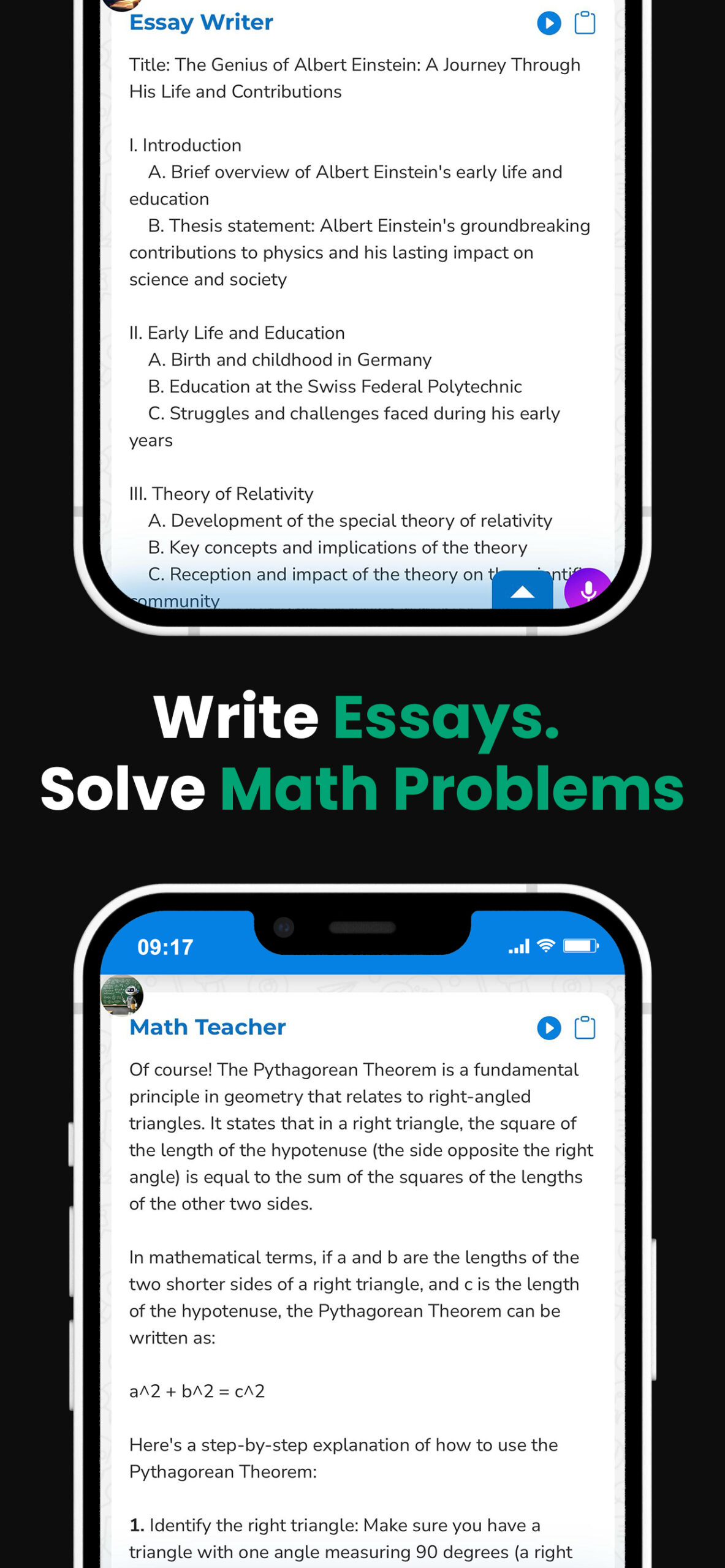

PowerBrain AI Chat App powered by ChatGPT & GPT-4

Download iOS: AI Chat GPT

Download Android: AI Chat GPT

Read more on our post about ChatGPT Apps & AI Chat

Key Takeaways

- The speed of Chat GPT is primarily affected by model complexity and server capacity, contributing to slow processing times.

- Chat GPT’s complexity stems from its capability to mimic human language and cognition. This requires decoding and understanding words in context, necessitating a highly sophisticated AI model and computational power.

- Server capacity refers to a server’s processing power at a given time. Given its complexity, Chat GPT requires high server power for fast processing times.

- Improving Chat GPT speed is a multifaceted process: increasing the server’s ability to handle multiple user requests efficiently, optimizing computational resources, and carefully balancing model complexity.

- While hardware improvements can boost server performance, cost-effectiveness, energy efficiency, and heat management must be addressed at the same time.

- Modifications at the model level, such as smarter task management and task-specific optimization, can alleviate server load and accelerate processing. Reducing model complexity without compromising output quality can also be a beneficial strategy.

Understanding Chat GPT Speed

Chat GPT can be excruciatingly slow despite its cutting-edge technology. The reasons are complex, and we’ll break them down in terms we can all understand.

Facing the Complexity Challenge: Chat GPT is an advanced model driven by artificial intelligence (AI). It’s not merely translating words or copying text. Instead, it strives to understand content and generate meaningful responses. Behind every reply, a very large number of arithmetic operations run in the background, letting it decode your words, place them in context, and create a human-like response. This adds up to heavy computational work, which slows the process down.

Server Capacity Matters: Capacity is another reason why chat GPT can be slow. It runs on cloud servers, where many instances often run concurrently. When demand exceeds capacity, the servers get overloaded and processing slows down. The effect of server capacity on GPT speed can be summarized qualitatively:

| Server Capacity | Relative Processing Speed |
|---|---|
| High | Fast |
| Medium | Moderate |
| Low | Slow |

The complexity of the model and the demands on the server significantly affect chat GPT speed. However, there are ways around these obstacles, and I assure you a faster chat GPT is possible. In the next sections, we’ll explore some potential solutions and optimizations. Bear in mind it’s a constant process of tweaking and learning, and Rome wasn’t built in a day. Patience and understanding are key when working at the frontier of AI technology.

Factors Affecting Chat GPT Performance

One crucial element slowing down our chat GPT is the complexity of the artificial intelligence (AI) model. This isn’t just any AI Model; it’s one designed to mimic human language and cognition with utmost sophistication. It can quickly produce contextually relevant, high-quality responses, but with great complexity comes increased computational demands.

Word decoding alone is an intensive process. The model generates its reply one token at a time (a token is roughly a word or a piece of a word), and each token it produces is fed back into the model to inform the next one. This step-by-step loop makes chat GPT heavily dependent on processing power. In short, the stronger your server, the better your chatbot will perform.
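
The feedback loop described here can be sketched in a few lines. This is a toy illustration, not how Chat GPT is actually implemented: `toy_next_token` is a hypothetical stand-in for a real model’s forward pass, and its lookup table is invented for the example. The only point is that a reply of n tokens takes n sequential model calls, plus one more to decide the reply is finished.

```python
# Toy autoregressive generation loop.
# `toy_next_token` is a hypothetical stand-in for a real language model's
# forward pass (an assumption made purely for illustration).

def toy_next_token(tokens):
    """Pick the next token from the last one, using a tiny fixed table."""
    table = {
        "<start>": "the",
        "the": "server",
        "server": "is",
        "is": "busy",
        "busy": "<end>",
    }
    return table.get(tokens[-1], "<end>")


def generate(max_tokens=10):
    """Generate tokens one at a time, counting how often the model runs."""
    tokens = ["<start>"]
    model_calls = 0
    while len(tokens) - 1 < max_tokens:
        next_token = toy_next_token(tokens)  # one full model pass per token
        model_calls += 1
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token feeds into the next step
    return tokens[1:], model_calls


words, calls = generate()
print(" ".join(words), "| model calls:", calls)
```

Because each step depends on the previous one, the calls cannot simply be run side by side, which is part of why longer answers take longer to appear.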

Server capacity also plays a major role. Imagine a server dealing with thousands, if not millions, of chat queries. That’s a lot of weight to carry. Overloading the server can drastically decrease the speed at which your chat GPT operates. Treat your servers like thoroughbred racehorses: optimally fed, routinely maintained, and never overworked.
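
One way to see why overload hurts so much is a textbook single-server queue (the M/M/1 model), where the mean response time is 1 / (service rate − arrival rate). Using this model is an assumption made for illustration: real deployments spread traffic over many machines, and the rates below are made-up numbers. The sketch only shows the general shape, where response time climbs steeply as demand approaches capacity.

```python
# Mean response time in a single-server (M/M/1) queue.
# The queueing model and all rates are illustrative assumptions,
# not measurements of any real chat service.

def mean_response_time(arrival_rate, service_rate):
    """Return W = 1 / (mu - lambda), or infinity once the server is overloaded."""
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


SERVICE_RATE = 10.0  # hypothetical: requests per second the server can finish

for arrival_rate in (5.0, 8.0, 9.0, 9.9):
    utilization = arrival_rate / SERVICE_RATE
    wait = mean_response_time(arrival_rate, SERVICE_RATE)
    print(f"utilization {utilization:.0%}: mean response time {wait:.2f} s")
```

Going from 50% to 90% utilization makes the average response five times slower in this model, even though the server itself has not changed.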

But it’s not all doom and gloom here. Mankind didn’t reach the moon by shying away from complexity, and AI is no different. By analyzing these factors, we can arrive at valuable solutions to optimize the speed of our chat GPTs. Tweaking CPU power, upscaling server capacity, and refining the AI Model are all part of a process that, in the world of AI technology, is continuously evolving and improving.

Let’s examine these solutions more exhaustively in the following sections. We can transform these weighty challenges into powerful advantages with some know-how and agility.

The Impact of Model Complexity

Chat GPT’s slow speed isn’t a reflection of inefficiency. It’s the flip side of being remarkably intuitive and complex. I want to dive deep and shed some light on the model complexity of Chat GPT and how it impacts its performance.

Chat GPT’s model complexity lies at the intersection of language and cognition, two inherently complex human characteristics that it tries to mimic. Mirroring them requires layers of detail and nuance, which makes the model computationally heavy when decoding words. Every word has several potential meanings depending on context, and resolving them takes an intricate language model.

The complexity level of the AI model is reflected in the underlying technology, machine learning algorithms, and the data it’s been trained on. Now, bear in mind that vast amounts of information are processed at immense speeds in real time. Considering this, it’s no wonder the model demands a higher computational capacity.

This high computational demand also affects speed. The heavier the load, the slower the speed—that’s a universal phenomenon in tech and elsewhere. So, a part of Chat GPT’s slow speed can be attributed to the heavy computational demands.
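
A rough back-of-the-envelope calculation shows how model size translates into time. It uses a common rule of thumb that generating one token costs about 2 floating-point operations per model parameter. The parameter counts and the hardware throughput below are hypothetical, chosen only to make the arithmetic easy, and real systems are often limited by memory bandwidth rather than raw arithmetic, so treat the result as an optimistic estimate.

```python
# Rough estimate of generation time from model size.
# Assumptions (not from the article): ~2 FLOPs per parameter per token,
# and a hypothetical accelerator sustaining 1e14 FLOP/s.

def seconds_per_token(num_parameters, flops_per_second):
    """Estimate the time to generate one token."""
    flops_per_token = 2 * num_parameters
    return flops_per_token / flops_per_second


HARDWARE_FLOPS = 1e14  # hypothetical sustained throughput

for name, params in (("small model", 1e9), ("large model", 1e11)):
    per_token = seconds_per_token(params, HARDWARE_FLOPS)
    reply_time = per_token * 500  # a 500-token reply
    print(f"{name}: ~{per_token * 1000:.2f} ms per token, ~{reply_time:.2f} s per reply")
```

Under these assumptions, a model with 100 times more parameters needs 100 times longer per token on the same hardware.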

However, the model’s complexity isn’t a problem. It’s an asset that enables Chat GPT to understand and produce nuanced, context-appropriate responses in real time. And it’s worth noting that this complexity is not static. Brilliant engineers consistently refine and evolve the model. Despite its growth, AI technology is still imperfect, and we’re constantly witnessing developments that optimize speed and efficiency.

Chat GPT’s complexity is a testament to the advanced AI technology it’s based on. Server capacity is just as important to understanding and improving its speed, so let’s turn to that next.

Server Capacity and Chat GPT Speed

We can’t discuss Chat GPT’s slow speed without addressing the elephant in the room: server capacity. It plays a pivotal role in obtaining greater efficiency and managing this advanced model’s computational demands.

Let’s dive a bit deeper. The concept of server capacity is quite straightforward: it’s the amount of processing work a server can handle at a given time. Naturally, the more complex the task, the more server power it requires. And, as we’ve already established, the tasks undertaken by Chat GPT are highly complex, so they require a lot of server power.

With more server capacity, we could process requests faster. Yet simply increasing the size of a server isn’t a one-size-fits-all solution. Larger servers are more expensive to maintain and bring new challenges, including energy efficiency and heat dissipation. So, refining server capacity is something of a delicate balancing act.

Here’s a comparison table to give an insight into Chat GPT’s processing requirements:

| Task | Processing Requirement |
|---|---|
| Typing | Low |
| Chat GPT | High |

Let’s address another often overlooked factor: multi-tasking capability. In reality, servers seldom handle single tasks. Instead, they’re processing myriads of requests from multiple users simultaneously. Chat GPT, being a highly advanced system, must be designed to manage these multiple requests efficiently to maintain reasonable processing times.
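
The effect of handling several requests at once can be sketched with Python’s `asyncio`. Everything here is a simplified assumption: `handle_request` is a hypothetical handler, the 0.05-second sleep stands in for model work, and `capacity` is an invented knob for how many requests the server can process at the same time.

```python
import asyncio
import time

# Toy server: at most `capacity` requests are processed at the same time.
# The handler and its fixed 0.05 s "work" are illustrative assumptions.

async def handle_request(request_id, slots):
    async with slots:              # wait for a free processing slot
        await asyncio.sleep(0.05)  # stand-in for the model's work
        return request_id


async def serve(num_requests, capacity):
    """Process all requests and return (results, elapsed seconds)."""
    slots = asyncio.Semaphore(capacity)
    start = time.perf_counter()
    results = await asyncio.gather(
        *(handle_request(i, slots) for i in range(num_requests))
    )
    return results, time.perf_counter() - start


for capacity in (2, 8):
    _, elapsed = asyncio.run(serve(8, capacity))
    print(f"capacity {capacity}: 8 requests finished in {elapsed:.2f} s")
```

With only two slots, the eight requests are worked through in four rounds. With eight slots they all run together, so the same workload finishes in roughly a quarter of the time.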

Understanding server capacity’s role broadens our vision as we continue to explore ways to optimize Chat GPT’s performance without losing its intricacy and efficiency. Let’s carry this understanding forward as we move on to ways of improving Chat GPT’s speed.

Improving Chat GPT Speed

Given the relationship between server power and Chat GPT’s speed, improving speed doesn’t come down to a single approach. Efficiency is crucial here. One promising avenue is the server’s multitasking ability: by strengthening its capacity to handle multiple user requests simultaneously, we can meaningfully improve processing speed.

Diving deeper, the guiding principle is to optimize the use of computational resources. Each user request consumes its own share of those resources, and understanding that share gives us a basis for boosting server performance. Server capacity and model complexity have to be managed together rather than in isolation.

But it’s worth highlighting that improving the server hardware isn’t a one-size-fits-all solution. As we aim to increase the speed of Chat GPT, cost-effectiveness, energy efficiency, and heat dissipation need to be addressed concurrently, and solutions have to be planned with those constraints in mind.

Investing in advanced cooling systems is a plausible step forward. Such a move can mitigate heat problems associated with high-performing servers. It’s beneficial to consider making changes at the model level, too. Implementations such as smarter task management and task-specific optimization can significantly lower computational requirements, lighten the load on the server, and, subsequently, accelerate the system’s processing capability.
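
The article doesn’t name a specific task-management technique, so as one possible example, here is a sketch of response caching: if the same prompt arrives repeatedly, the expensive model call runs only once. `expensive_model_call` is a hypothetical placeholder, and caching like this only helps when identical prompts are acceptable to answer identically.

```python
from functools import lru_cache

# Sketch of response caching as one form of task management.
# `expensive_model_call` is a hypothetical placeholder for real model work.

model_calls = 0


def expensive_model_call(prompt):
    """Pretend to run the model, counting how often it is invoked."""
    global model_calls
    model_calls += 1
    return f"answer to: {prompt}"


@lru_cache(maxsize=1024)
def cached_answer(prompt):
    """Return a stored answer when the exact same prompt was seen before."""
    return expensive_model_call(prompt)


prompts = ["What is AI?", "What is AI?", "Define GPU", "What is AI?"]
for prompt in prompts:
    cached_answer(prompt)

print("requests:", len(prompts), "| model calls:", model_calls)
```

Four requests trigger only two model calls here, because the repeated prompt is served from the cache and never reaches the model.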

Finally, let’s look at reducing complexity. A fine balance needs to be achieved to maintain the sophisticated conversational ability of Chat GPT while making it less resource-intensive. Model compression techniques such as quantization, pruning, and distillation aim to reduce a model’s size and computational cost without unduly compromising the quality of its output.
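
One widely used way to make a model less resource-intensive is quantization, which stores weights with fewer bits. Choosing this technique for the example is an assumption, since the article doesn’t specify one, and the five weights below are made up. The sketch maps floating-point weights to 8-bit integers and back to show how small the rounding error can be.

```python
# Toy symmetric int8 quantization of a handful of weights.
# The weights are invented; real models have billions of them.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.40, -0.66]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", quantized)
print(f"largest rounding error: {max_error:.6f}")
```

Each weight now fits in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error no larger than half the scale.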

Remember, the quest to enhance the speed of Chat GPT involves a combination of strategies, from hardware upgrades to software modifications. It’s more than just a quick fix; it calls for a comprehensive, thoughtful approach.

Conclusion

So, there’s more to boosting Chat GPT’s speed than just ramping up server capacity. It’s about smartly leveraging resources and striking the right balance between model complexity and server power. Don’t forget the importance of dealing with cost, energy, and heat issues. Investing in advanced cooling systems and task-specific optimizations can make a real difference, and simplifying the model without compromising its conversational ability can help further. In the end, it’s a mix of hardware and software tweaks that’ll help us win the race to a faster Chat GPT.

Frequently Asked Questions

What does the article recommend to improve the speed of Chat GPT?

The article recommends improving the server’s efficiency and multitasking capabilities and balancing server capacity with model complexity. It also stresses addressing cost, energy efficiency, and heat dissipation challenges.

How can computational resources be optimized?

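Computational resources can be optimized by improving the server’s ability to handle many user requests at once and by applying smarter task management and task-specific optimizations at the model level, which lighten the load on the server.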

What is the role of model complexity in Chat GPT’s speed?

Model complexity impacts processing speed. It’s suggested that model complexity be reduced while maintaining conversational ability; hence, striking a balance is crucial for optimal speed.

How can you address cost, energy efficiency, and heat dissipation challenges?

Investing in advanced cooling systems helps with the heat produced by high-performing servers, while task-specific optimizations lower computational requirements, which in turn eases cost and energy demands.

Does enhancing Chat GPT’s speed involve hardware upgrades alone?

No. Enhancing Chat GPT’s speed involves a comprehensive approach that combines hardware updates with software modifications.