Training GPT on Your Own Data: Evaluation, Testing, and Fine-tuning

Ever wondered how you can train GPT on your own data? It’s not as complex as you might think. I’ve spent years mastering this process and am eager to share my insights.

Training GPT models can unlock a world of possibilities. You’ll be able to tailor the AI’s responses based on the data you feed it, making it more relevant and effective for your specific needs.

Don’t worry if you’re new to this. I’ll guide you through the process, ensuring you understand every step. So, let’s dive in and learn how to train GPT on your own data.

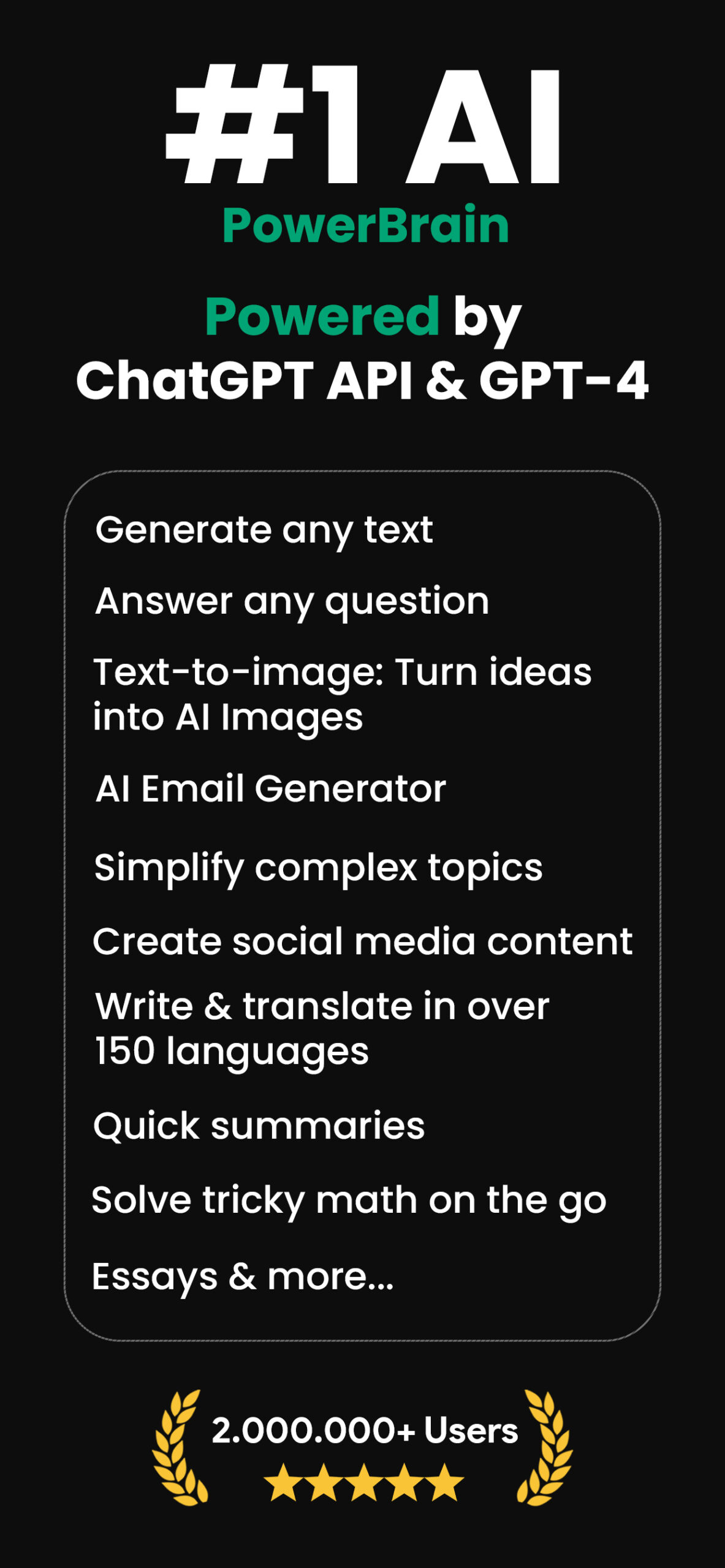

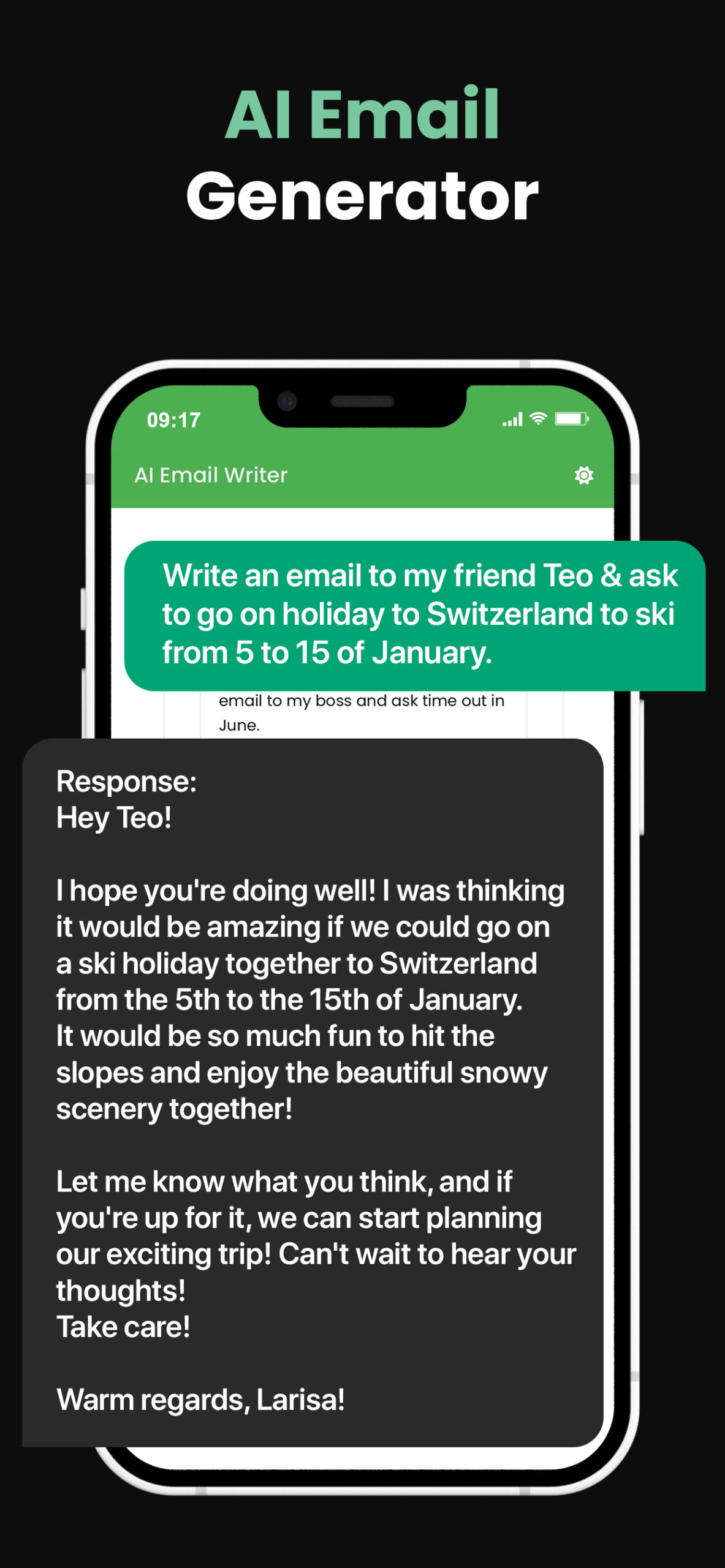


Key Takeaways

- Training a GPT model on your own data lets you tailor its responses to that data, making them more relevant to your specific needs.

- Choosing the right data for training is critical, considering aspects like data relevancy, data diversity, data volume, and potential data bias.

- Data preprocessing is vital to ensure that AI models can efficiently interpret the data. It involves data cleaning, data formatting, and data categorization.

- The preparation of a suitable training environment is fundamental for GPT model training. This includes setting up the right hardware and installing the required software tools.

- Fine-tuning a pretrained GPT model through transfer learning saves time and is effective, requiring far fewer computational resources than training from scratch.

- Evaluating and testing the trained model is crucial, using methods such as loss calculation, perplexity score, and case-specific evaluations.

Choosing the Right Data for Training

Choosing the right data for training GPT models isn’t just about using what you have at hand. The data must align with the model’s purpose and the context it will be deployed in. Remember that data is the foundation on which AI models are built, so its quality significantly affects the final outcome. Let’s take a closer look at what to consider when selecting data to train GPT on your own.

Data relevance is a crucial aspect. Consider this: if you’re developing an AI to assist with medical inquiries, incorporating medical journals, textbooks, and related literature would yield the best results. The data you choose needs to reflect the intended tasks of the model.
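As a rough illustration of filtering for relevance, here is a minimal sketch that keeps only documents containing enough domain vocabulary. The keyword list and the 0.1 threshold are hypothetical placeholders for a medical assistant. Keyword matching is a crude proxy; real projects often use a trained classifier or embedding similarity instead.

```python
import re

# Hypothetical domain vocabulary for a medical assistant; replace with your own terms.
MEDICAL_TERMS = {"diagnosis", "symptom", "symptoms", "treatment", "patient", "dosage", "clinical"}

def relevance_score(text: str, vocabulary: set[str]) -> float:
    """Fraction of words in `text` that appear in `vocabulary` (a crude relevance proxy)."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return sum(word in vocabulary for word in words) / len(words)

def filter_relevant(docs: list[str], vocabulary: set[str], threshold: float = 0.1) -> list[str]:
    """Keep only documents whose relevance score meets the (hypothetical) threshold."""
    return [doc for doc in docs if relevance_score(doc, vocabulary) >= threshold]

docs = [
    "The patient reported symptoms consistent with the diagnosis.",
    "Our new sneakers are available in five colors.",
]
print(filter_relevant(docs, MEDICAL_TERMS))  # keeps only the first document
```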

Next comes Data Diversity. The more diverse your dataset, the better the GPT model can understand varied inputs and generate varied responses. Imagine training your GPT model solely on 19th-century English literature. It might become remarkably eloquent, yet struggle to comprehend contemporary slang or technical jargon! So, include a variety of sources related to your model’s intended focus.
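One rough way to gauge lexical diversity is the distinct-n ratio: the number of unique n-word sequences divided by the total number of n-word sequences. This minimal sketch assumes simple lowercase whitespace splitting (punctuation is not handled), and it measures variety of wording only, not coverage of topics or sources.

```python
def distinct_n(texts: list[str], n: int = 2) -> float:
    """Unique n-grams divided by total n-grams across all texts (1.0 means no repetition)."""
    unique: set[tuple[str, ...]] = set()
    total = 0
    for text in texts:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        unique.update(ngrams)
        total += len(ngrams)
    return len(unique) / total if total else 0.0

repetitive = ["thank you for your question", "thank you for your question"]
varied = ["thank you for your question", "the dosage depends on body weight"]
print(distinct_n(repetitive), distinct_n(varied))  # 0.5 1.0
```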


Data Volume is another pivotal point. More data generally helps, but never at the expense of quality. GPT models learn by identifying patterns across large amounts of text. However, indiscriminately dumping in noisy or heavily duplicated data can backfire: the model may memorize repeated passages instead of generalizing, and output quality can suffer.
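Duplicates are one of the most common forms of low-quality volume. This minimal sketch removes exact duplicates after light normalization (lowercasing and collapsing whitespace), keeping the first occurrence. It is a simplification: near-duplicates that differ by more than case or spacing slip through, and catching those needs techniques such as MinHash.

```python
import hashlib
import re

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())

def deduplicate(docs: list[str]) -> list[str]:
    """Drop exact duplicates (after normalization), keeping the first occurrence."""
    seen: set[str] = set()
    unique_docs = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique_docs.append(doc)
    return unique_docs

docs = ["Take two tablets daily.", "take two  tablets daily.", "Consult your doctor."]
print(deduplicate(docs))  # ['Take two tablets daily.', 'Consult your doctor.']
```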

Furthermore, keep an eye out for Data Bias. GPT models learn what they’re fed, so any biases in the training data will be passed on to the final model. This can limit how broadly the model can be applied and lead to unexpected, undesirable outputs.
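A simple first check for bias is to count how often different groups are mentioned in the data. The sketch below does that with hypothetical term lists. Raw counts can reveal imbalance in representation, but they say nothing about how each group is portrayed, so treat this as a starting point rather than a bias audit.

```python
import re
from collections import Counter

# Hypothetical term groups; choose groups and terms relevant to your own domain.
TERM_GROUPS = {
    "female": {"she", "her", "woman", "women"},
    "male": {"he", "him", "his", "man", "men"},
}

def group_mentions(docs: list[str], groups: dict[str, set[str]]) -> dict[str, int]:
    """Count how many words in `docs` belong to each group's term list."""
    counts = Counter({name: 0 for name in groups})
    for doc in docs:
        for word in re.findall(r"[a-z]+", doc.lower()):
            for name, terms in groups.items():
                if word in terms:
                    counts[name] += 1
    return dict(counts)

docs = ["He is a surgeon.", "He leads the team and she assists him."]
print(group_mentions(docs, TERM_GROUPS))  # {'female': 1, 'male': 3}
```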

Let’s summarize the points to consider when selecting data:

- Data Relevancy

- Data Diversity

- Data Volume

- Data Bias

Preprocessing Your Data

Having selected your data, the next step is preprocessing it for your GPT model. I can’t overstate how important this part is: it takes meticulous attention to clean, format, and categorize the data correctly.

First, data cleaning is the process of spotting and correcting (or removing) corrupt or inaccurate records in the dataset. It’s essential because it weeds out information that would only mislead the model, such as empty fields, inaccurate values, or obsolete records.
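Here is a minimal cleaning sketch for comma-separated records like those in Table 1. It assumes a four-field schema (name, age, city, occupation), which is my reading of the table, drops rows with missing fields or a non-numeric age, and normalizes spacing. Splitting on commas is a simplification; for real CSV files with quoted commas, use Python’s `csv` module.

```python
EXPECTED_FIELDS = 4  # assumed schema: name, age, city, occupation
QUOTE_CHARS = '"\u201c\u201d'  # straight and curly double quotes

def clean_records(lines: list[str]) -> list[str]:
    """Drop incomplete or invalid records and normalize spacing around commas."""
    cleaned = []
    for line in lines:
        # Trim whitespace and surrounding quotes from every field.
        fields = [field.strip().strip(QUOTE_CHARS).strip() for field in line.split(",")]
        if len(fields) != EXPECTED_FIELDS or any(not field for field in fields):
            continue  # malformed row or empty field
        if not fields[1].isdigit():
            continue  # age must be a whole number
        cleaned.append(", ".join(fields))
    return cleaned

raw_rows = [
    "John Doe, 23, London, Programmer",
    '17, "", "" ,Lawyer',
    "Jane Doe,35, New York,  Doctor",
]
for row in clean_records(raw_rows):
    print(row)
```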

Next, data formatting, an equally essential step, puts the data into a form your model can consume. Without it, your GPT model may struggle to make sense of the information. The key formatting step is tokenization: breaking text into smaller units, or ‘tokens,’ that the model processes. GPT models use subword tokenizers based on byte-pair encoding (BPE), so real tokens are often word fragments; the word-level split in Table 2 is a simplification.
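Byte-pair encoding starts from individual characters and repeatedly merges the most frequent adjacent pair. The toy sketch below learns merges from a tiny word list to show the idea. It is a simplification: GPT-2’s real tokenizer works on bytes, treats spaces as part of tokens, and was trained on a huge corpus, so in practice you should always use the pretrained model’s own tokenizer.

```python
from collections import Counter

def apply_merge(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Fuse every adjacent occurrence of `pair` in a symbol sequence."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merge rules from a list of words."""
    vocab = Counter(tuple(word) for word in words)  # each word starts as characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        # Most frequent pair; ties broken alphabetically so results are deterministic.
        best = min(pairs, key=lambda p: (-pairs[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[apply_merge(symbols, best)] += freq
        vocab = new_vocab
    return merges

def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Split a word into subword tokens by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)

merges = learn_bpe(["low", "low", "lower", "lowest"], num_merges=3)
print(merges)                      # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(tokenize("lowest", merges))  # ['lowe', 's', 't']
print(tokenize("slow", merges))    # ['s', 'low']
```

The unseen word “slow” still gets tokenized by reusing pieces learned from other words, which is why subword tokenizers rarely run into unknown-word problems.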

Lastly, data categorization organizes data items into a predetermined set of categories, such as topics or task types. This makes the dataset easier to analyze and balance, which in turn helps the GPT model learn to respond appropriately in each category.
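A minimal keyword-based categorizer might look like the sketch below. The category names and keywords are hypothetical, and ties go to whichever category is listed first. For large or nuanced datasets, a trained classifier is usually more reliable than keyword matching.

```python
import re

# Hypothetical categories and keywords for a customer-support dataset.
CATEGORIES = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "technical": {"error", "crash", "install", "login"},
}

def categorize(text: str, categories: dict[str, set[str]], default: str = "other") -> str:
    """Assign the category with the most keyword hits; fall back to `default` if none match."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {name: len(words & keywords) for name, keywords in categories.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

for text in ["Please refund my last payment.", "The app shows an error at login.", "What are your opening hours?"]:
    print(categorize(text, CATEGORIES), "|", text)
```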

Let’s make these preprocessing techniques concrete with two examples. Table 1 shows a sample dataset before and after cleaning; Table 2 shows how a sentence is split into tokens during formatting.

Table 1: Data Cleaning

| Before Cleaning | After Cleaning |
|---|---|
| John Doe, 23, London, Programmer | John Doe, 23, London, Programmer |
| 17, “”, “” ,Lawyer | (Data Removed) |
| Jane Doe, 35, New York, Doctor | Jane Doe, 35, New York, Doctor |

Table 2: Tokenization

| Before Tokenization | After Tokenization |
|---|---|
| The quick brown fox jumps over the lazy dog. | ‘The’, ‘quick’, ‘brown’, ‘fox’, ‘jumps’, ‘over’, ‘the’, ‘lazy’, ‘dog.’ |

As these tables show, preprocessing sets the stage for GPT models to deliver good results by making the data understandable to them. The next section covers setting up the training environment.

Setting Up Your Training Environment

After understanding the nuances of data preprocessing, the next pivotal step is setting up the training environment for your GPT models. This process is fundamental and can often determine the success of your model.

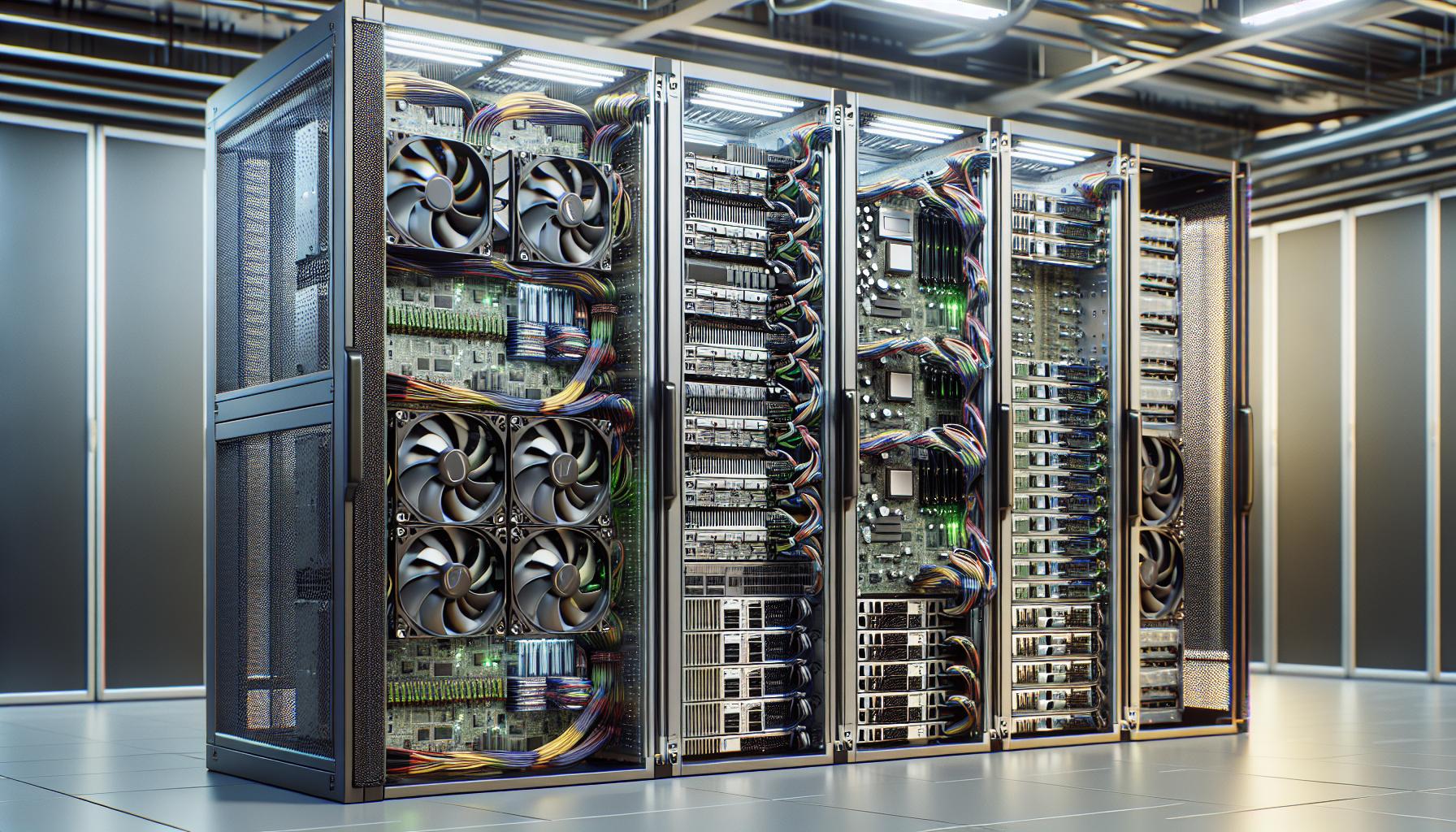

Consider your environment’s specifications carefully. To train transformer models like GPT, you need hardware that can handle the workload. GPUs can speed up training considerably, making an enormous difference in the overall time it takes.
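Before choosing hardware, a back-of-the-envelope memory estimate helps. A common rule of thumb is that full fine-tuning with the Adam optimizer needs about 16 bytes of GPU memory per parameter for weights, gradients, and optimizer state (for example, 4 + 4 + 8 bytes in fp32), before counting activations. The sketch below applies that rule; treat the results as lower bounds, not measurements.

```python
BYTES_PER_PARAM_ADAM = 16  # rule of thumb: weights + gradients + Adam moment estimates

def training_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM_ADAM) -> float:
    """Rough GPU memory in GB for model state during training.

    Activations, batch-size effects and framework overhead are NOT included.
    """
    return num_params * bytes_per_param / 1e9

for name, params in [("GPT-2 small", 124_000_000), ("GPT-2 XL", 1_500_000_000)]:
    print(f"{name}: ~{training_memory_gb(params):.1f} GB before activations")
```

By this estimate, GPT-2 small needs roughly 2 GB before activations, whereas GPT-2 XL needs about 24 GB, more than a 16 GB V100 holds without memory-saving techniques.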

Here’s a sample overview of the hardware I use:

| Hardware | Specs |
|---|---|
| GPU | NVIDIA Tesla V100 |
| CPU | Intel Xeon E5 |
| RAM | 64 GB |

Depending on your budget and requirements, you might opt for cloud-based GPU solutions like Google Colab or Amazon SageMaker instead of investing in your own hardware.

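Moreover, having the right software tools in place is equally essential. You’ll need a recent Python 3 release: Python 3.6 reached end-of-life in 2021, and current versions of Transformers and PyTorch require newer Python releases, so check their installation notes for the exact minimum. The importance of packages like Hugging Face’s Transformers library, NumPy, and PyTorch cannot be overstated. Keeping these tools up to date, and compatible with one another, helps you avoid bugs and performance problems.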

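NVIDIA’s CUDA platform is what enables GPU-accelerated training, letting PyTorch use your GPU’s processing power to the full. Note that PyTorch’s prebuilt CUDA packages bundle the CUDA runtime they need, so you usually only have to install a compatible NVIDIA driver; the full CUDA toolkit matters mainly if you compile custom CUDA extensions.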

Whether you train locally or in the cloud, set up a virtual environment. It isolates the project’s dependencies so they don’t interfere with the system’s Python installation.

To guide you through this, here are the essential steps to follow:

- Install a supported Python 3 version and make sure it runs properly.

- Create and activate a virtual environment for the project.

- Install the necessary Python packages with pip: Hugging Face’s Transformers, NumPy, and PyTorch.

- If you’re training on an NVIDIA GPU, install a driver (and, if you need it, a CUDA toolkit) compatible with your PyTorch build.
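To confirm the setup steps worked, a small standard-library script can check the Python version, whether a virtual environment is active, and whether the packages can be imported. The minimum version `(3, 9)` is an assumption; check what your chosen Transformers and PyTorch releases require. The package names are import names (`transformers`, `numpy`, `torch`).

```python
import importlib.util
import sys

MIN_PYTHON = (3, 9)  # assumption: confirm against your Transformers/PyTorch versions
REQUIRED = ["transformers", "numpy", "torch"]  # import names of the packages above

def in_virtualenv() -> bool:
    """A venv sets sys.prefix to the environment while sys.base_prefix points at the base install."""
    return sys.prefix != sys.base_prefix

def missing_packages(names: list[str]) -> list[str]:
    """Return the packages that cannot be found on the current import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]

def check_environment() -> dict:
    return {
        "python": ".".join(map(str, sys.version_info[:3])),
        "python_ok": sys.version_info >= MIN_PYTHON,
        "virtualenv": in_virtualenv(),
        "missing_packages": missing_packages(REQUIRED),
    }

if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Once PyTorch is installed, `torch.cuda.is_available()` tells you whether it can see a GPU.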

Remember, the ultimate aim is a smooth, uninterrupted training process. With the environment set up, we’re ready to fine-tune our GPT model, which is the subject of the next section.

Fine-tuning the GPT Model

With the stage set and the training environment ready, the next phase in training a GPT model on our data is fine-tuning. You might ask: “Why fine-tune?” Because pretraining a GPT model from scratch is rarely practical, or even necessary.

Large language models like GPT need colossal amounts of data and computational resources to train from scratch. OpenAI’s GPT-3, for example, has a whopping 175 billion parameters. Just imagine the time, money, and resources needed to train such a model from the ground up. No easy feat, right?
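To put “colossal” into numbers, a widely used rule of thumb estimates training compute as roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. GPT-3 was reported to be trained on about 300 billion tokens. The sustained throughput in the sketch (30 TFLOP/s on one GPU) is an illustrative assumption, not a benchmark.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * num_params * num_tokens

def gpu_years(total_flops: float, sustained_flops_per_second: float) -> float:
    """Wall-clock years on one GPU running at a constant sustained throughput."""
    return total_flops / sustained_flops_per_second / SECONDS_PER_YEAR

flops = training_flops(num_params=175e9, num_tokens=300e9)
print(f"GPT-3 training compute: ~{flops:.2e} FLOPs")  # ~3.15e+23 FLOPs
print(f"~{gpu_years(flops, 30e12):.0f} GPU-years at an assumed 30 TFLOP/s")
```

Under that assumption, a single GPU would need a few hundred years, which is why fine-tuning an existing model is the practical route.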

Let me introduce a practical alternative to you: Transfer learning. It is a method where a pre-trained model is fine-tuned on specific tasks. Instead of starting from scratch, we’re leveraging what the model has already learned.

A fine-tuned GPT model can deliver excellent results at a fraction of the cost and compute time. The pretrained model already captures general language patterns, and fine-tuning adapts it to your task. In full fine-tuning, all layers are updated with a small learning rate; a cheaper variant freezes the lower layers, which tend to hold general language knowledge, and trains only the higher layers. Think of it as building a tower: the lower floors are the strong foundation (the pretraining), and we design the top floors (the fine-tuning) to our own specifications.
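To see what freezing the lower layers saves, the sketch below counts parameters for GPT-2 small from its published configuration (12 layers, hidden size 768, a 50,257-token vocabulary, 1,024 positions). It assumes the embeddings are frozen along with the lowest blocks; because GPT-2 ties its output layer to the token embeddings, that layer is frozen too. How many blocks to freeze is a judgment call, not a fixed recipe.

```python
# GPT-2 small configuration: layers, hidden size, vocabulary size, context length.
N_LAYER, D_MODEL, VOCAB, N_CTX = 12, 768, 50257, 1024

def block_params(d: int) -> int:
    """Parameters in one GPT-2 transformer block (weights, biases, two LayerNorms)."""
    attn = (d * 3 * d + 3 * d) + (d * d + d)     # fused QKV projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)  # expand to 4d, then project back to d
    layer_norms = 2 * (2 * d)                    # scale and shift for ln_1 and ln_2
    return attn + mlp + layer_norms

def parameter_split(frozen_blocks: int) -> tuple[int, int]:
    """Return (trainable, total) when embeddings and the lowest `frozen_blocks` blocks are frozen."""
    embeddings = VOCAB * D_MODEL + N_CTX * D_MODEL  # token + position embeddings (output head is tied)
    final_ln = 2 * D_MODEL
    per_block = block_params(D_MODEL)
    total = embeddings + N_LAYER * per_block + final_ln
    trainable = (N_LAYER - frozen_blocks) * per_block + final_ln
    return trainable, total

trainable, total = parameter_split(frozen_blocks=8)
print(f"total: {total:,}")  # total: 124,439,808
print(f"trainable: {trainable:,} ({trainable / total:.1%})")
```

In PyTorch, freezing means setting `requires_grad = False` on the chosen parameters; in Hugging Face’s GPT-2 implementation, the transformer blocks live in `model.transformer.h`.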

Here are the essential steps:

- Choose a pretrained GPT model that suits your objectives. Hugging Face’s Transformers library, together with the Hugging Face Hub, is a gold mine for this.

- Load your custom dataset. Tokenize it with the same tokenizer the pretrained model uses, and split it into sequences no longer than the model’s maximum context length.

- Initiate the training process. Remember that the learning rate and the number of epochs play a pivotal role in the model’s performance. Adjust these parameters as needed.
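Making the data fit the architecture usually means concatenating tokenized documents and cutting them into fixed-length blocks no longer than the model’s context window (1,024 tokens for GPT-2). This minimal sketch works on token ID lists; the IDs below are made up, and in practice they come from the model’s tokenizer. The separator ID is a parameter; for GPT-2, the end-of-text token `<|endoftext|>` has ID 50256.

```python
from itertools import chain

def group_into_blocks(token_id_lists: list[list[int]], block_size: int, eos_id: int) -> list[list[int]]:
    """Join tokenized documents (each followed by eos_id) and cut them into equal blocks.

    The trailing remainder shorter than block_size is dropped, a common simplification.
    """
    stream = list(chain.from_iterable(ids + [eos_id] for ids in token_id_lists))
    usable = len(stream) - len(stream) % block_size
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]

docs = [[10, 11, 12], [20, 21], [30, 31, 32, 33]]  # made-up token IDs
print(group_into_blocks(docs, block_size=4, eos_id=0))
# [[10, 11, 12, 0], [20, 21, 0, 30], [31, 32, 33, 0]]
```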

With these steps in mind and careful attention to detail, fine-tuning a GPT model can be a powerful tool in your AI repertoire. It’s all about striking the right balance and adjusting the right settings to get optimal performance. Stay tuned.
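Because the learning rate and the number of epochs matter so much, fine-tuning scripts commonly ramp the learning rate up linearly during a short warmup and then decay it linearly to zero. The sketch below computes that schedule (the same shape as `get_linear_schedule_with_warmup` in Hugging Face Transformers) and the total number of optimizer steps. The dataset size, batch size, 10% warmup, and peak rate of 5e-5 are illustrative assumptions, not recommendations.

```python
def total_training_steps(num_examples: int, batch_size: int, epochs: int) -> int:
    """Optimizer steps for the whole run (a final partial batch still counts as a step)."""
    steps_per_epoch = (num_examples + batch_size - 1) // batch_size  # ceiling division
    return steps_per_epoch * epochs

def linear_warmup_decay(step: int, total_steps: int, warmup_steps: int, peak_lr: float) -> float:
    """Learning rate at `step`: linear warmup from 0 to peak_lr, then linear decay to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, remaining)

total = total_training_steps(num_examples=10_000, batch_size=8, epochs=3)
warmup = total // 10
for step in (0, warmup // 2, warmup, total // 2, total):
    print(step, f"{linear_warmup_decay(step, total, warmup, peak_lr=5e-5):.2e}")
```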

Evaluating and Testing Your Trained Model

Once your GPT model has been trained on your dataset, the next important step is evaluation and testing. Don’t rush this phase; it determines whether your model is actually effective. Expect to find problems, understand their causes, and fix them as you evaluate.

Now, let’s dive into how to actually evaluate a GPT model.

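Loss calculation is the go-to starting point: simple, yet essential. For a language model, the loss is usually the cross-entropy, which measures how much probability the model assigned to each token that actually came next in your evaluation text. The lower the loss, the better the model predicts that text. Measure it on a held-out validation set as well as on the training data, because a training loss that keeps falling while the validation loss rises is a sign of overfitting. The table below summarizes the relationship between loss and performance.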

| Loss Value (on held-out data) | Performance |
|---|---|
| High | Poor |
| Low | Good |
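Concretely, the cross-entropy loss is the average negative log of the probability the model gave to each actual next token. The sketch below computes it from such probabilities (made-up values here; in practice your framework computes it from the model’s outputs), plus a simple early-stopping check that flags when validation loss has stopped improving, a common sign of overfitting. The patience of 2 evaluations is an arbitrary choice.

```python
import math

def cross_entropy(target_probs: list[float]) -> float:
    """Mean negative log-likelihood (natural log) of the actual next tokens."""
    return -sum(math.log(p) for p in target_probs) / len(target_probs)

def should_stop_early(val_losses: list[float], patience: int = 2) -> bool:
    """True once `patience` evaluations have passed without beating the best validation loss."""
    best_index = min(range(len(val_losses)), key=val_losses.__getitem__)
    return len(val_losses) - 1 - best_index >= patience

print(cross_entropy([0.9, 0.8, 0.95]))  # confident, correct predictions -> low loss
print(cross_entropy([0.1, 0.2, 0.05]))  # poor predictions -> high loss
print(should_stop_early([2.1, 1.8, 1.7, 1.75, 1.8]))  # True: no improvement for 2 evaluations
```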

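After the loss comes the perplexity score, which is simply the exponential of the average per-token cross-entropy loss. Lower perplexity means the model is more certain about the next token; a perplexity of 20 means the model is, on average, as uncertain as if it were choosing uniformly among 20 tokens. Because perplexity is a direct transformation of the loss, it ranks models exactly as the loss does when both are computed on the same evaluation data with the same tokenizer. Its value is that it is easier to interpret.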
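Converting between loss and perplexity is a one-liner, assuming the loss is measured with the natural log (as PyTorch’s cross-entropy is). The sketch also shows the “effective number of choices” reading: a model that spreads probability evenly over V tokens has loss ln V and therefore perplexity V. The two checkpoint losses are invented numbers for illustration, not results.

```python
import math

def perplexity(mean_loss_nats: float) -> float:
    """Perplexity from mean per-token cross-entropy measured with the natural log."""
    return math.exp(mean_loss_nats)

# Uniform guessing over V tokens gives loss ln(V), hence perplexity V.
for vocab_size in (2, 50_257):
    print(vocab_size, round(perplexity(math.log(vocab_size))))

# Hypothetical validation losses for two checkpoints evaluated on the same data.
for name, loss in [("checkpoint A", 3.2), ("checkpoint B", 2.9)]:
    print(f"{name}: loss={loss:.2f}, perplexity={perplexity(loss):.1f}")
```

Checkpoint B has the lower loss and therefore the lower perplexity; the metric adds interpretability, not a second opinion.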

Evaluating your model isn’t just about looking at numbers; it’s also about understanding the results and adapting your model where necessary. Case-specific evaluations are a practical way to check whether the model is accurate and useful for your specific project. For example, if you’re developing a chatbot, write back-and-forth exchanges that resemble real conversations and review how the model replies.
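Case-specific checks can be automated with a small test harness. In the sketch below, `generate_reply` is a hypothetical stand-in for your fine-tuned model’s generation call, and the prompts and expected phrases are made up. Phrase matching is deliberately crude, so pair it with human review of the actual replies; for multi-turn chatbot tests, you could pass the conversation history as the prompt.

```python
def generate_reply(prompt: str) -> str:
    """Hypothetical stand-in for the fine-tuned model; swap in a real generation call."""
    canned = {"How do I reset my password?": "Open Settings, then choose Reset password."}
    return canned.get(prompt, "Sorry, I don't know.")

# Hypothetical test cases: each prompt lists phrases a good answer should contain.
TEST_CASES = [
    {"prompt": "How do I reset my password?", "must_contain": ["reset password"]},
    {"prompt": "What are your opening hours?", "must_contain": ["open"]},
]

def run_cases(generate, cases):
    """Return (prompt, passed) pairs; a case passes if every expected phrase appears."""
    results = []
    for case in cases:
        reply = generate(case["prompt"]).lower()
        passed = all(phrase.lower() in reply for phrase in case["must_contain"])
        results.append((case["prompt"], passed))
    return results

results = run_cases(generate_reply, TEST_CASES)
for prompt, passed in results:
    print("PASS" if passed else "FAIL", prompt)
print(f"pass rate: {sum(p for _, p in results) / len(results):.0%}")
```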

Conclusion

So, we’ve walked through the key steps to train GPT on your own data and seen why the evaluation and testing phase matters for gauging the model’s effectiveness. During this phase, loss values and perplexity scores help us spot problems, and case-specific tests, such as realistic chatbot conversations, check that the model fits its intended use. Fine-tuning GPT on your data isn’t just about the training process; it’s also about understanding and optimizing the model’s performance after training. By doing so, we can make sure our model isn’t just trained, but effective, efficient, and accurate.

What is the purpose of evaluating and testing a GPT model after fine-tuning?

The purpose of evaluating and testing a trained GPT model post-fine-tuning is to assess its effectiveness, spot any bugs or issues, and subsequently address them. The evaluation process helps to ascertain the model’s understanding and performance in handling text data.

How is the evaluation process done?

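The evaluation process typically involves calculating the loss and perplexity on held-out data. These metrics indicate how well the model predicts text it wasn’t trained on. Case-specific tests, such as sample conversations for a chatbot, complement them by checking accuracy on the tasks you actually care about.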

Why is the adaptation of the model important?

Adapting the model to specific use cases, like a chatbot, helps ensure it is efficient and accurate where it matters. Testing it on realistic conversations checks that it produces relevant, natural replies for the project before it is deployed.